This article is part of Demystifying AI, a series of posts that (try to) disambiguate the jargon and myths surrounding AI.

Since the early days of artificial intelligence, computer scientists have been dreaming of creating machines that can see and understand the world as we do. The efforts have led to the emergence of computer vision, a vast subfield of AI and computer science that deals with processing the content of visual data.

In recent years, computer vision has taken great leaps thanks to advances in deep learning and artificial neural networks. Deep learning is a branch of AI that is especially good at processing unstructured data such as images and videos.

These advances have paved the way for boosting the use of computer vision in existing domains and introducing it to new ones. In many cases, computer vision algorithms have become a very important component of the applications we use every day.

A few notes on the current state of computer vision

Before becoming too excited about advances in computer vision, it’s important to understand the limits of current AI technologies. While improvements are significant, we are still very far from having computer vision algorithms that can make sense of photos and videos in the same way as humans do.

For the time being, deep neural networks, the meat-and-potatoes of computer vision systems, are very good at matching patterns at the pixel level. They’re particularly efficient at classifying images and localizing objects in images. But when it comes to understanding the context of visual data and describing the relationship between different objects, they fail miserably.

Recent work done in the field shows the limits of computer vision algorithms and the need for new evaluation methods. Nonetheless, the current applications of computer vision show how much can be accomplished with pattern matching alone. In this post, we’ll explore some of these applications, but we will also discuss their limits.

Commercial applications of computer vision

You’re using computer vision applications every day, maybe without noticing it in some cases. The following are some of the practical and popular applications of computer vision that are making life fun and convenient.

Image search

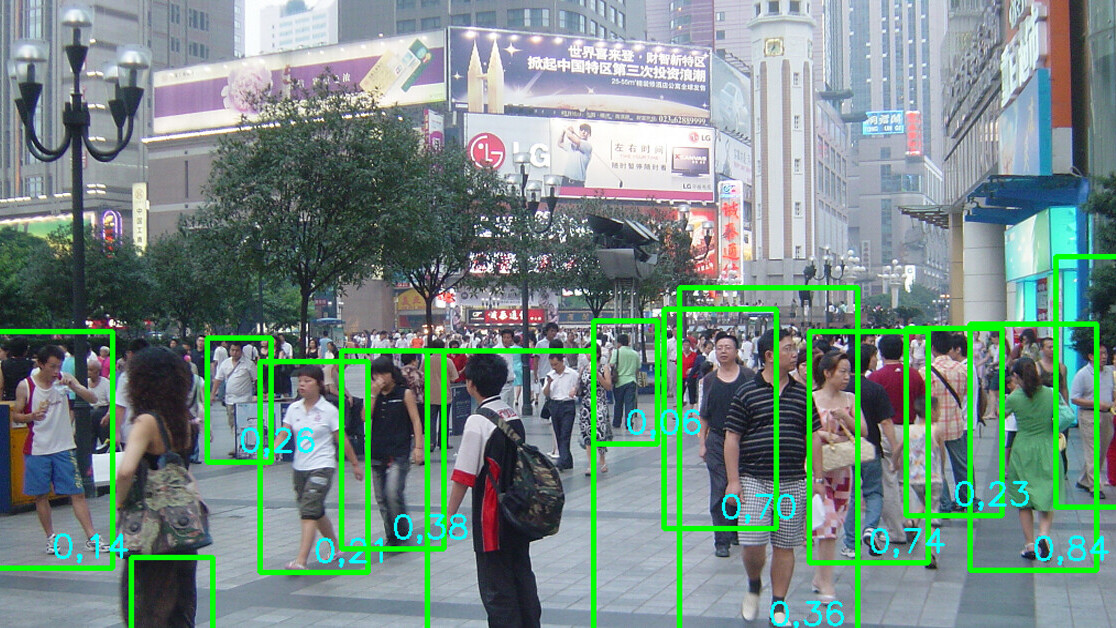

One of the areas where computer vision has made huge progress is image classification and object detection. A neural network trained on enough labeled data will be able to detect and highlight a wide range of objects with impressive accuracy.

Few companies can match Google’s vast store of user data. And the company has been using its virtually limitless (and ever-growing) repository of user data to develop some of the most efficient AI models. When you upload photos to Google Photos, it uses its computer vision algorithms to annotate them with content information about scenes, objects, and persons. You can then search your images based on this information.

For instance, if you search for “dog,” Google will automatically return all images in your library that contain dogs.
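Once photos carry labels, the search itself can be a simple lookup. The following is a minimal sketch of that idea, not a description of how Google Photos works internally: the photo names and labels are made up and stand in for the output of a vision model, and `build_label_index` and `search` are hypothetical helpers.

```python
from collections import defaultdict


def build_label_index(photo_labels):
    """Map each label to the set of photos annotated with it."""
    index = defaultdict(set)
    for photo, labels in photo_labels.items():
        for label in labels:
            index[label.lower()].add(photo)
    return index


def search(index, query):
    """Return the photos tagged with the query label, in sorted order."""
    return sorted(index.get(query.lower(), set()))


# Hypothetical annotations, standing in for what a vision model would produce.
photo_labels = {
    "beach.jpg": ["sea", "dog", "person"],
    "park.jpg": ["dog", "tree"],
    "office.jpg": ["desk", "person"],
}

index = build_label_index(photo_labels)
print(search(index, "dog"))  # ['beach.jpg', 'park.jpg']
```

The hard part is the labeling, which is where the neural network comes in. The search step only works as well as the labels it is given.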

Google’s image recognition isn’t perfect, however. In one incident, the computer vision algorithm mistakenly tagged a picture of two dark-skinned people as “gorilla,” causing embarrassment for the company.

Google also uses computer vision to extract text from images in your library, Drive, and Gmail attachments. For instance, when you search a term in your inbox, Gmail will also look in the texts in images. A while back, I searched my home address in Gmail and got an email with an image attachment that contained an Amazon package with my address in it.

Image editing and enhancement

Many companies are now using machine learning to provide automated enhancements to photos. Google’s line of Pixel phones uses on-device neural networks to make automatic enhancements such as white balancing and to add effects such as blurring the background.

Another remarkable improvement that advances in computer vision have ushered in is smart zooming. Traditional zooming features usually make images blurry because they fill the enlarged areas by interpolating between pixels. Instead of enlarging pixels, computer vision-based zooming focuses on features such as edges and patterns. This approach results in crisper images.
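To see why interpolation blurs, here is a small sketch of bilinear upscaling on a tiny grayscale image. It is an illustration only: `bilinear_upscale` is a hypothetical helper, the corner-aligned sampling it uses is just one of several conventions, and real image editors use optimized and often more elaborate filters.

```python
def bilinear_upscale(image, factor):
    """Upscale a grayscale image (a list of rows) by an integer factor."""
    h, w = len(image), len(image[0])
    out_h, out_w = h * factor, w * factor
    out = []
    for y in range(out_h):
        # Position of this output row in source coordinates.
        sy = y * (h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(out_w):
            # Position of this output column in source coordinates.
            sx = x * (w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Blend the four nearest source pixels.
            top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
            bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# A hard vertical edge: black on the left, white on the right.
edge = [[0, 255],
        [0, 255]]

zoomed = bilinear_upscale(edge, 2)
print([round(v) for v in zoomed[0]])  # [0, 85, 170, 255]
```

The sharp jump from 0 to 255 turns into a gradual ramp, which the eye reads as blur. Learned zooming methods try to reconstruct the edge instead of averaging across it.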

Many startups and longstanding graphics companies have turned to deep learning to make enhancements to images and videos. Adobe’s Enhance Details technology, featured in Lightroom CC, uses machine learning to create sharper zoomed images.

Image editing tool Pixelmator Pro sports an ML Super Resolution feature, which uses a convolutional neural network to provide crisp zoom and enhance.

Facial recognition applications

Until recently, facial recognition was a clunky and expensive technology limited to police research labs. But in recent years, thanks to advances in computer vision algorithms, facial recognition has found its way into various computing devices.

The iPhone X introduced Face ID, an authentication system that uses an on-device neural network to unlock the phone when it sees its owner’s face. During setup, Face ID trains its AI model on the face of the owner and works decently under different lighting conditions, facial hair, haircuts, hats, and glasses.

In China, many stores are now using facial recognition technology to provide a smoother payment experience to customers (at the price of their privacy though). Instead of using credit cards or mobile payment apps, customers only need to show their face to a computer vision-equipped camera.

Despite the advances, however, current facial recognition is not perfect. AI and security researchers have found numerous ways to cause facial recognition systems to make mistakes. In one case, researchers at Carnegie Mellon University showed that by wearing specially crafted glasses, they could fool facial recognition systems into mistaking them for celebrities.

Data-efficient home security

With the chaotic growth of the internet of things (IoT), internet-connected home security cameras have grown in popularity. You can now easily install security cameras and monitor your home online at any time.

Each camera sends a lot of data to the cloud. But most of the footage recorded by security cameras is irrelevant, causing a large waste of network, storage, and electricity resources. Computer vision algorithms can enable home security cameras to become more efficient in the usage of these resources.

The smart cameras remain idle until they detect an object or movement in their video feed, after which they can start sending data to the cloud or sending alerts to the camera’s owner. Note, however, that computer vision is still not very good at understanding context. So don’t expect it to tell the difference between benign movements (e.g., a ball rolling across the room) and things that need your attention (e.g., a thief breaking into your home).
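The "stay idle until something moves" idea can be sketched with plain frame differencing. This is an assumption-laden toy, not how any particular camera works: `changed_fraction` and `frames_to_upload` are hypothetical helpers, the thresholds are arbitrary, and the frames are tiny grids of brightness values.

```python
def changed_fraction(prev, curr, pixel_threshold=25):
    """Fraction of pixels whose brightness changed by more than pixel_threshold."""
    total = 0
    changed = 0
    for prev_row, curr_row in zip(prev, curr):
        for p, c in zip(prev_row, curr_row):
            total += 1
            if abs(p - c) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def frames_to_upload(frames, pixel_threshold=25, area_threshold=0.1):
    """Return indices of frames that differ enough from the previous frame."""
    selected = []
    for i in range(1, len(frames)):
        fraction = changed_fraction(frames[i - 1], frames[i], pixel_threshold)
        if fraction >= area_threshold:
            selected.append(i)
    return selected


# Made-up 2x2 grayscale frames: an empty room, then something bright appears.
empty = [[10, 10], [10, 10]]
moved = [[10, 200], [10, 10]]
frames = [empty, empty, moved, moved, empty]

print(frames_to_upload(frames))  # [2, 4]
```

Only the frames where something changed (the object appearing and then leaving) are flagged. As the text notes, a check like this says nothing about what moved, so a rolling ball and an intruder trigger it equally.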

Interacting with the real world

Augmented reality, the technique of overlaying real-world videos and images with virtual objects, has become a growing market in the past few years. AR owes much of its expansion to advances in computer vision algorithms. AR apps use machine learning to detect and track the target locations and objects where they place their virtual objects. You can see the combination of AR and computer vision in many applications, such as Snapchat filters and Warby Parker’s Virtual Try-On.

Computer vision also enables you to extract information from the real world through the lens of your phone’s camera. A very remarkable example is Google Lens, which uses computer vision algorithms to perform a variety of tasks, such as reading business cards, detecting the style of furniture and clothes, translating street signs, and connecting your phone to wi-fi networks based on router labels.

Advanced applications of computer vision

Thanks to advances in deep learning, computer vision is now solving problems that were previously very hard or even impossible for computers to tackle. In some cases, well-trained computer vision algorithms can perform on par with humans who have years of experience and training.

Medical image processing

Before deep learning, creating computer vision algorithms that could process medical images required extensive efforts from software engineers and subject matter experts. They had to cooperate to develop code that extracted relevant features from radiology images and then examine them for diagnosis. (AI researcher Jeremy Howard has an interesting discussion on this.)

Deep learning algorithms provide end-to-end solutions that make the process much easier. The engineers create the right neural network structure and then train it on x-rays, MRI images, or CT scans annotated with the outcomes. The neural network then finds the relevant features associated with each outcome and can then diagnose future images with impressive accuracy.

Computer vision has found its way into many areas of medicine, including cancer detection and prediction, radiology, and diabetic retinopathy.

Some AI researchers have gone as far as saying deep learning will soon replace radiologists. But those who have experience in the field beg to differ. There’s much more to diagnosing and treating diseases than looking at slides and images. And let’s not forget that deep learning extracts patterns from pixels—it does not replicate all the functions of a human doctor.

Playing games

Teaching computers to play games has always been a hot area of AI research. Most game-playing programs use reinforcement learning, an AI technique that develops its behavior through trial and error.

Computer vision algorithms play an important role in helping these programs parse the content of the game’s graphics. One thing to note, however, is that in many cases, the graphics are “dumbed down” or simplified to make it easier for the neural networks to make sense of them. Also, for the moment, AI algorithms need huge amounts of data to learn games. For instance, OpenAI’s Dota-playing AI had to go through 45,000 years’ worth of gameplay to achieve champion level.
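To give a feel for what "trial and error" means here, the following toy sketch runs tabular Q-learning, one classic reinforcement learning method, on a five-cell corridor where the only reward sits at the right end. Everything in it is an assumption made for illustration (the corridor, the reward, the learning parameters, the function names), and it has nothing to do with the scale or methods of systems like OpenAI's Dota bot.

```python
import random

N_STATES = 5               # cells 0..4; reaching cell 4 ends the episode
ACTIONS = ("left", "right")


def step(state, action):
    """Move one cell; reward 1 only when the goal cell is reached."""
    nxt = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by acting, observing rewards, and updating."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            if rng.random() < epsilon:
                action = rng.randrange(2)          # explore
            else:
                best = max(q[state])               # exploit, ties broken randomly
                action = rng.choice([a for a in (0, 1) if q[state][a] == best])
            nxt, reward = step(state, action)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            target = reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


def greedy_policy(q):
    """Best known action for each non-goal cell."""
    return [ACTIONS[0 if row[0] > row[1] else 1] for row in q[:-1]]


q = train()
print(greedy_policy(q))  # ['right', 'right', 'right', 'right']
```

The agent starts out wandering at random and only gradually learns that moving right pays off. Even this trivial task takes hundreds of episodes, which hints at why complex games demand so much simulated play.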

Cashier-less stores

In 2016, Amazon introduced Go, a store where you could walk in, pick up whatever you want, and walk out without getting arrested for shoplifting. Go used various artificial intelligence systems to obviate the need for cashiers.

As customers move about the store, cameras equipped with advanced computer vision algorithms monitor their behavior and keep track of the items they pick up or return to shelves. When they leave the store, their shopping cart is automatically charged to their Amazon account.
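The bookkeeping side of this can be sketched in a few lines. This is a hypothetical illustration, not Amazon's system: it assumes the vision layer already emits clean "pick" and "return" events, which is the genuinely hard part, and the item names and prices (in cents) are invented.

```python
from collections import Counter


def build_cart(events):
    """Fold a stream of (action, item) events into a virtual cart."""
    cart = Counter()
    for action, item in events:
        if action == "pick":
            cart[item] += 1
        elif action == "return" and cart[item] > 0:
            # Ignore returns of items the customer never picked up.
            cart[item] -= 1
    return {item: count for item, count in cart.items() if count > 0}


def total_cents(cart, prices):
    """Amount to charge when the customer walks out."""
    return sum(prices[item] * count for item, count in cart.items())


# Hypothetical events, as a vision system might report them for one customer.
events = [
    ("pick", "sandwich"),
    ("pick", "juice"),
    ("pick", "juice"),
    ("return", "juice"),
    ("return", "chips"),
]
prices = {"sandwich": 550, "juice": 300, "chips": 200}

cart = build_cart(events)
print(cart)                       # {'sandwich': 1, 'juice': 1}
print(total_cents(cart, prices))  # 850
```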

Three years after the announcement, Amazon has opened 18 Go stores, and the project is still a work in progress. But there are promising signs that computer vision (helped by other technologies) will one day make checkout lines a thing of the past.

Self-driving cars

Cars that can navigate roads without human drivers have been one of the longest-standing dreams and biggest challenges of the AI community. Today, we’re still very far from having self-driving cars that can navigate any road in various lighting and weather conditions. But we have made a lot of progress thanks to advances in deep neural networks.

One of the biggest challenges of creating self-driving cars is enabling them to make sense of their surroundings. While different companies are tackling the problem in various ways, one thing that is constant among them is computer vision technology.

Cameras installed around the vehicle monitor the car’s environment. Deep neural networks parse the footage and extract information about surrounding objects and people. That information is combined with data from other equipment such as lidars to create a map of the area and help the car navigate roads and avoid collisions.

Creepy applications of computer vision

Like all other technologies, not everything about artificial intelligence is pleasant. Advanced computer vision algorithms can scale up malicious uses. Here are some of the applications of computer vision that have caused concern.

Surveillance

It is not only phone and computer makers who are interested in facial recognition technology. In fact, the biggest customers of facial recognition technology are government agencies who have a vested interest in using the technology to automatically identify criminals in security camera footage.

But the question is, where do you draw the line between national security and citizen privacy? China shows how too much of the former and too little of the latter can result in a state of surveillance that gives too much control to the government. The widespread use of security cameras powered by facial recognition technology enables the government to closely track the movements of millions of citizens, whether they are criminal suspects or not.

In the U.S. and Europe, things are a bit more complicated. Tech companies have faced resistance from their employees and digital rights activists in providing facial recognition technology to law enforcement. Some states and cities in the U.S. have banned the public use of facial recognition.

Autonomous weapons

Computer vision can also give eyes to weapons. Military drones can use AI algorithms to identify objects and pick out targets. In the past few years, there’s been a lot of controversy over the use of AI by the military. Google had to call off the renewal of its contract to develop computer vision technology for the Department of Defense after it faced criticism from its employees.

For the moment, there are still no autonomous weapons. Most military institutions are using AI and computer vision in systems that have a human in the loop.

But there’s fear that with advances in computer vision and greater engagement of the military sector, it’s only a matter of time before we have weapons that choose their own targets and pull the trigger without a human making the decision.

Renowned computer scientist and AI researcher Stuart Russell has founded an organization dedicated to stopping the development of autonomous weapons.

This story is republished from TechTalks, the blog that explores how technology is solving problems… and creating new ones.
