People in India watch a lot of videos on the internet. According to a report from The Wall Street Journal, Indians spend more than 8.5GB of mobile data on average, and most of it on video. Last year, YouTube said more than 95% of content consumption is in regional languages. So naturally, there’s a lot of appetite for vernacular videos, but not all creators know all Indic languages.

One solution is dubbing. Last week, just after Parasite won the Oscar award, Mother Jones claimed dubbing is superior than translated subtitles. But let me tell you it sucks. I’ve seen plenty of English language movies dubbed in Hindi, and I either can’t stand them or I die laughing. Lip-syncing is often off, and dubbing seems quite unnatural.

[Read: New Zealand’s first AI police officer reports for duty]

Now, researchers from the International Institute of Information Technology from the southern city of Hyderabad, India have developed a new AI model that translates and lip-syncs a video from one language to another with great accuracy.

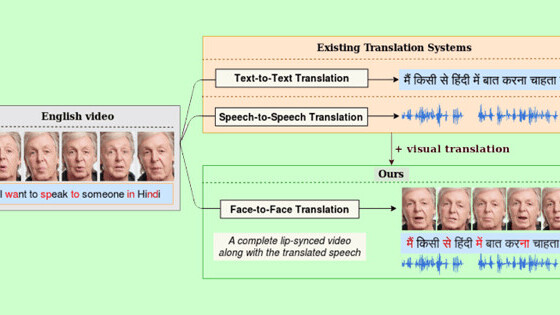

The team said we already have the technology to get translated textual or speech output from a video. However, visual translation — such as the movement of lips — is lost during dubbing. To solve that, Indian researchers have developed a new Generative Adversarial Network (GAN) called LipGAN. While it can match lip movements of translated text in an original video, it can also correct lip movements in a dubbed movie.

To translate videos, the model transcribes speech in the video using speech recognition. Then it uses a specialized model trained for Indic languages to translate the text, for example from English to Hindi. Then the speech recognition model converts it into voice. The speech-to-speech translation coupled with LipGAN forms the whole model. Researchers noted that their translation model was more accurate than Google Translate.

Prof. C.V. Jawahar, Dean, Research and Development, IIIT-H, said this technology will help in creating more content in regional languages:

Manually creating vernacular content from scratch, or even manually translating and dubbing existing videos will not scale at the rate of digital content is being created. That’s why we want it to be completely automatic.

There are superbly created videos on various topics by MIT and other prestigious institutions that are inaccessible to a larger Indian audience simply because they cannot comprehend the accent(s). Forget the rural folks, even I won’t understand!

The team added the model still struggles with moving or multiple faces in videos. Apart from addressing these problems, the team wants to work on improving expressions on face after translation.

We’ve seen plenty of efforts of GANs recreating face or body movement using just a single photo. But most of that research is in a single language; most likely to be English. It has taken a couple of iterations for these AI models to become quite convincing to the human eye. So, while the above model has its drawbacks, it’s one of the few GANs working on a multilingual model. It can definitely improve over time with more data.

Last year, deepfakes were in news for its negative use. But, video modification AI for educational or entertainment purposes is quite an important use case of that technology. This model is a perfect example of that.

You can read the full paper here.

You’re here because you want to learn more about artificial intelligence. So do we. So this summer, we’re bringing Neural to TNW Conference 2020, where we will host a vibrant program dedicated exclusively to AI. With keynotes by experts from companies like Spotify, RSA, and Medium, our Neural track will take a deep dive into new innovations, ethical problems, and how AI can transform businesses. Get your early bird ticket and check out the full Neural track.

Get the TNW newsletter

Get the most important tech news in your inbox each week.