The noise you’re about to hear wasn’t cut from the upcoming Ring remake. I didn’t steal it from a god-fucking-awful Eli Roth film. It was created by security researchers, trying to find ways to trick the voice-assistant systems we all use.

The researchers, who were based at UC Berkeley and Georgetown University, examined how the voice-recognition-based AI assistants found on most modern smartphones could be used as an attack vector.

The attack used voice commands that were intentionally obfuscated, so that a human listener would be unable to make out what they were, while a voice-recognition algorithm could still recognize them.

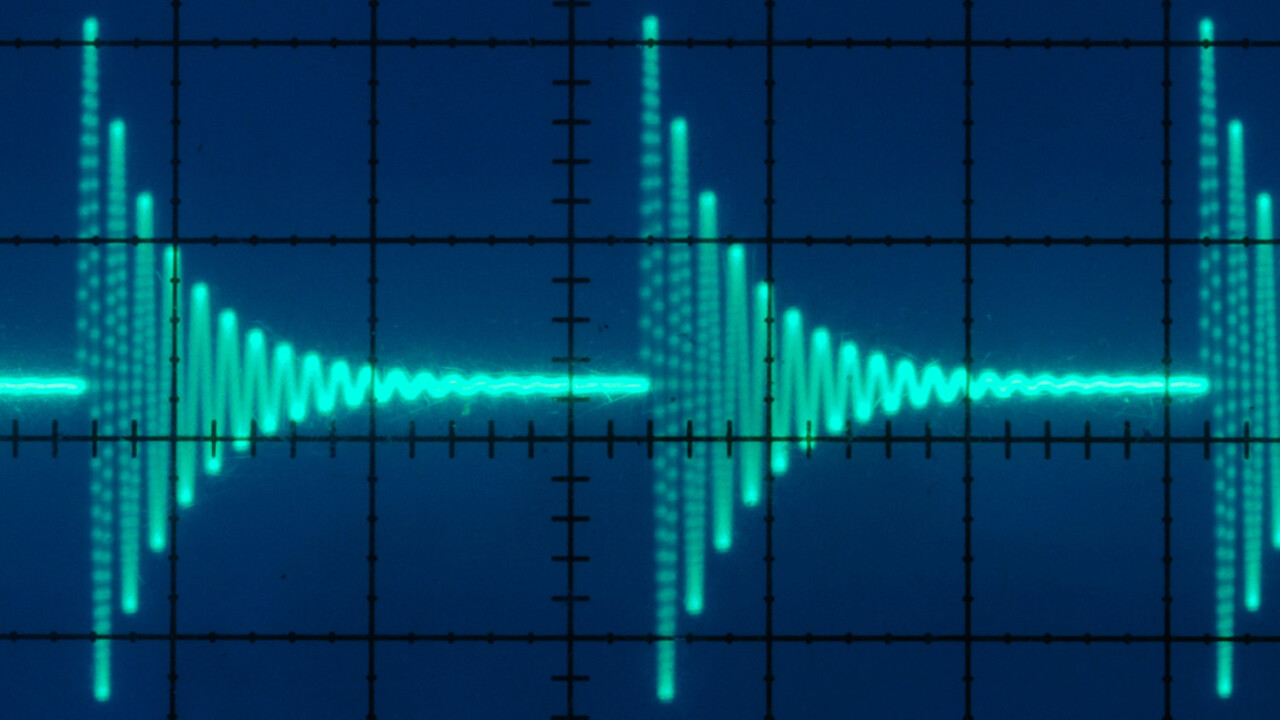

By playing one of these in the vicinity of a smartphone, you can coerce it into performing certain actions, as demonstrated below.

The research paper and the accompanying piece in The Atlantic are both fascinating reads that illustrate the frailty of these systems, and the lack of any real security protections afforded by them. But this isn’t a secret.

There are plenty of examples where overly trusting AI assistants have gotten their owners into trouble. Just last month we reported on how one news broadcast caused dozens of Amazon Echo devices to order expensive dollhouses, just because a presenter said “Alexa ordered me a dollhouse”.

And until Apple, Google, and their ilk introduce some kind of authentication features, these problems will only get worse.
