When I was a kid, I briefly held the aspiration of making my own cartoons. I dabbled in the animation portion of Flash and made a few cheesy .flvs, but soon realized I wasn’t up to the task of dealing with keyframes and drawing pretty vectors.

Maybe if I’d started in 2018, things would’ve gone differently. Increasingly powerful technology and the advent of AI make it easier to get your feet wet in animation than ever.

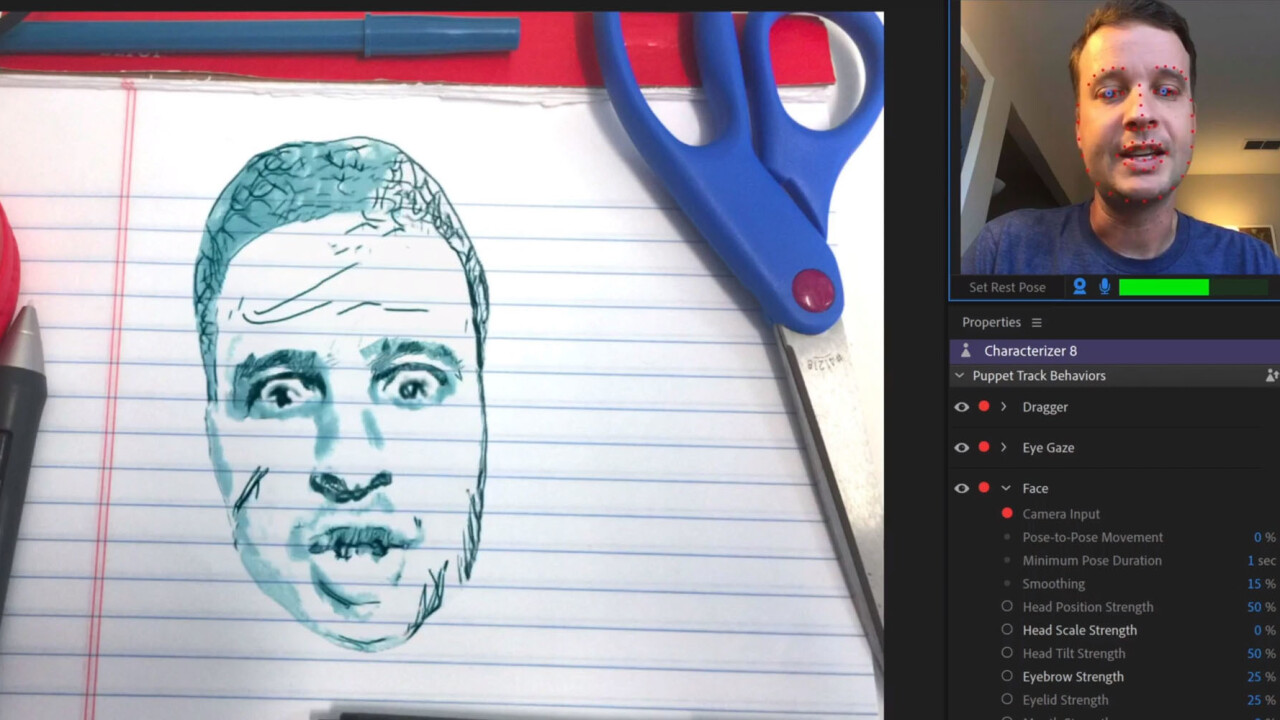

Case in point: Adobe is releasing an impressive tool called Characterizer that can combine your face with reference artwork to create your own motion-capture puppet. A feature that began its life as a research project and tech demo, it’s being integrated into Adobe’s Character Animator app starting today.

Sirr Less, Senior Product Manager of Animation, walked me through the process. First, the app captures several images of your face making a variety of expressions for accurate motion tracking. Then you feed it a piece of art, such as a portrait or cartoon, to determine the visual style of your character – kind of like that Prisma app, but for animation.

Adobe’s Sensei AI analyzes the images, and within a minute or two, the app will have combined your face with the artwork. As with most AI-powered visuals, the effectiveness of the style transfer will vary, but in the live demos I saw, Characterizer did an eerily good job of rendering Less’ face in a variety of styles.

But unlike a basic style transfer on an image or video, Characterizer renders a fully editable puppet, including the same control points and adjustable layers you’d have with any Character Animator puppet. This means your puppet doesn’t have to look like you, or like the portrait you may have initially referenced. The tool simply provides you with a powerful starting point.

Characterizer isn’t totally new, mind you; the feature was first introduced during Adobe’s Sneak Peeks session at MAX last year, though it was then called ‘Puppetron.’

It’s just one example of the winding journey new features often take at Adobe.

I’d always assumed that new features began life as carefully curated projects for product teams at Adobe. But Stephen DiVerdi, a Senior Research Scientist at the company, tells me that most ‘sneaks’ actually begin as projects for research interns.

These get whittled down to a handful, which are then shown off at Adobe MAX. The most popular ones – or the ones that best align with current product goals – often end up becoming real features. Sometimes, they become their own apps, which in turn get their own sneaks.

Such was the path for Characterizer, which began as a sneak for Character Animator, an app that itself began as a sneak for After Effects called Project Animal back in 2014.

Other examples of sneaks that could eventually make their way to the real world include content-aware fill for video (Project Cloak), the ability to swap out skies in photos (Sky Replace), or editing a voice clip by typing new words (VoCo).

DiVerdi tells me that the number of sneak submissions has increased over the years thanks to a growing research team and the advent of AI. The big theme at MAX this year is allowing AI to empower us to be more creative, and features like Characterizer show we’re only scratching the surface of what’s possible.

Adobe will be revealing its next batch of sneaks at MAX today, so stay tuned for whatever else the company has in store. It might just end up being the next feature you rely on.
