With a billion people worldwide living with some form of disability, there is tremendous scope for the development of assistive technologies – a market expected to be worth over $26 billion by 2024.

In the next 10 years, billions of IoT smart devices will be connected. AI will enable these devices to listen, see, reason and predict without a 24/7 dependence on the cloud (this physical interface between humans and machines is what Microsoft terms the “intelligent edge”), and the average smart home will generate around 50GB of data every single day.

Artificial Intelligence (AI) is a crucial part of that puzzle, as AI advances such as predictive text, visual recognition and speech-to-text transcription are already showing enormous potential for helping people with vision, hearing, cognitive, learning and mobility disabilities – as well as a range of mental health conditions.

“AI can be a game changer for people with disabilities. Already we’re witnessing this as people with disabilities expand their use of computers to hear, see and reason with impressive accuracy,” explains Brad Smith, President and CLO at Microsoft.

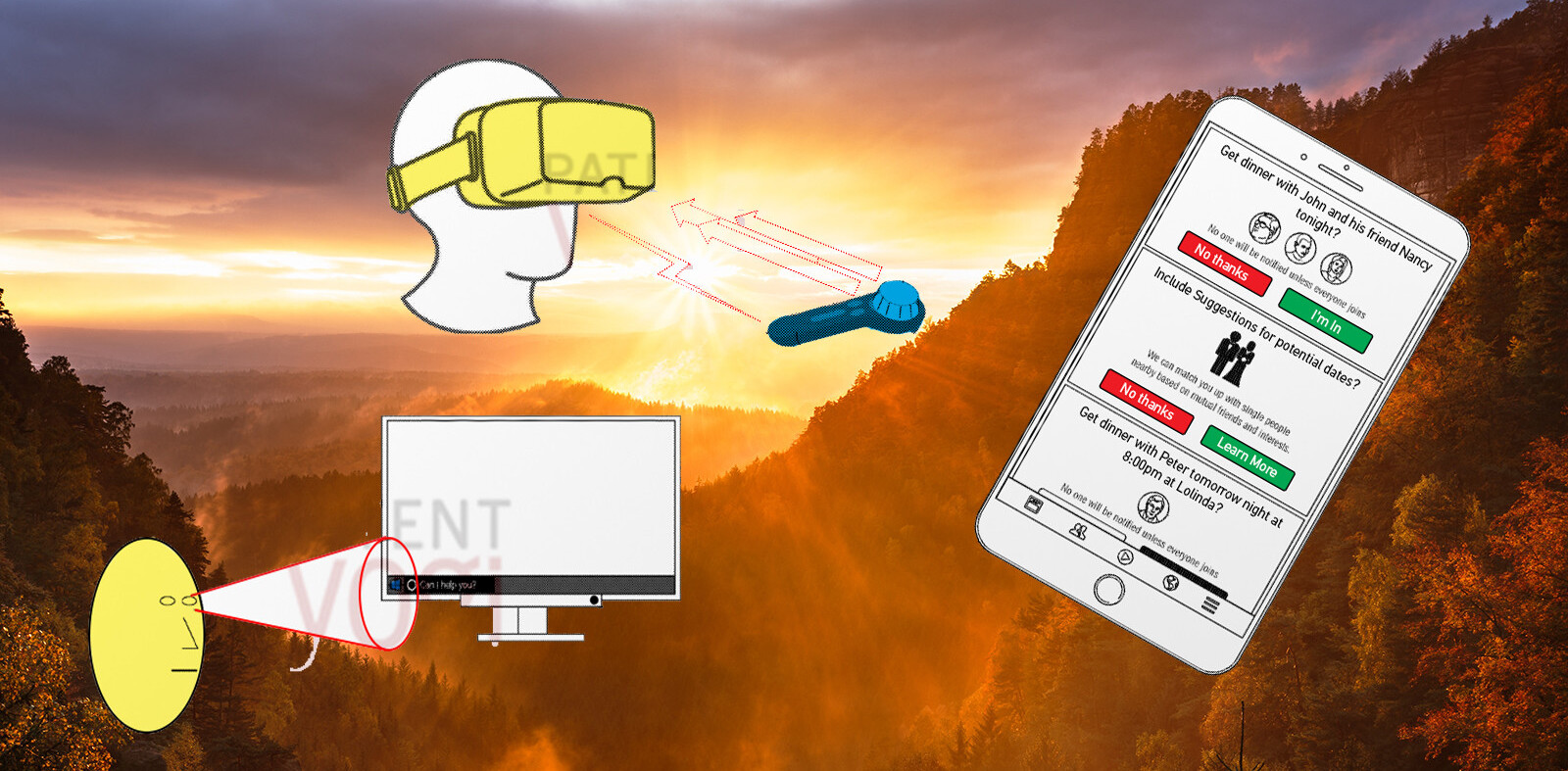

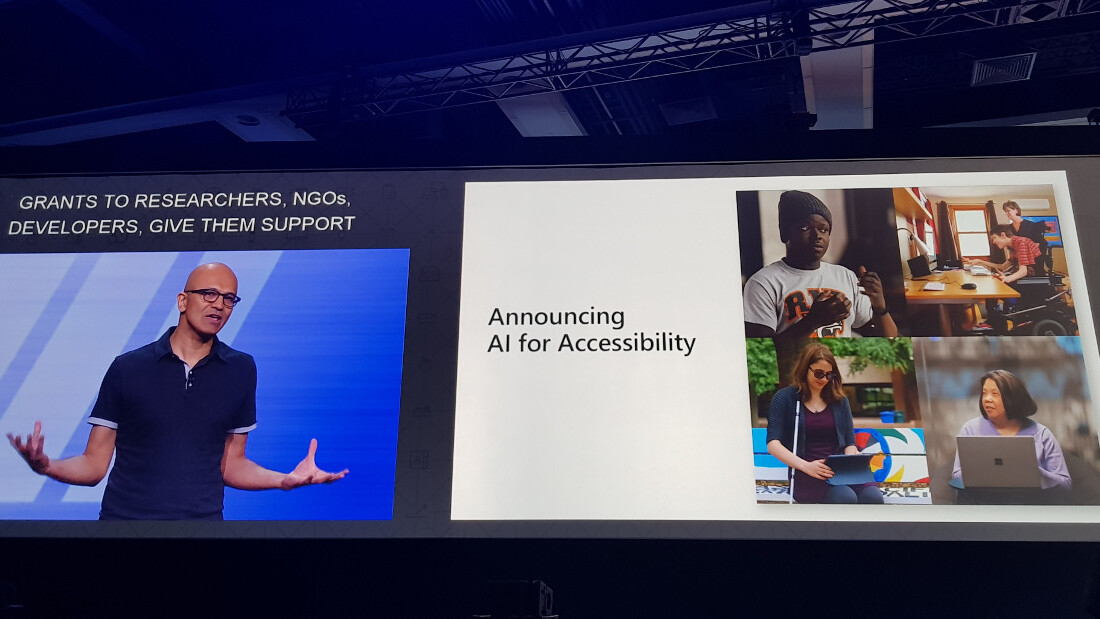

This week at the company’s annual Build developer conference in Seattle, Microsoft announced the launch of AI for Accessibility, a new $25 million 5-year program aimed at accelerating the development of accessible and intelligent AI solutions. The aim is to enable disabled users to achieve more in three specific scenarios: Employment, Modern Life and Human Connection. The program comprises grants, technology investments and expertise, with innovations integrated into Microsoft Cloud services.

“Around the world, only one in 10 people with disabilities has access to assistive technologies and products. By making AI solutions more widely available, we believe technology can have a broad impact on this important community,” says Smith.

The AI for Accessibility program will provide seed grants of technology to developers, universities, nongovernmental organizations, and inventors taking an AI-first approach focused on creating solutions in those areas.

It will also identify the projects that show the most promise and support them with larger investments of technology and access to Microsoft AI experts to help bring them to scale, as well as working with external partners to incorporate AI innovations into platform-level services.

The program will be run by Jenny Lay-Flurrie, Microsoft’s Chief Accessibility Officer, who has been credited with achieving a cultural change within the company. Lay-Flurrie, who is deaf, was named a “Champion of Change” by the White House for her work and created a hiring program through which the company identifies and trains people with autism.

“We have started to see the impact AI can have in accelerating accessible technology. Microsoft Translator is today empowering people who are deaf or hard-of-hearing with real-time captioning of conversations. Helpicto, an application that turns voice commands into images, is enabling children in France with autism to better understand situations and communicate with others,” says Smith.

How that works in practice was poignantly showcased during Monday’s keynote, where Eric Bridges, CEO of the American Council of the Blind, talked about using the Seeing AI talking camera app on a daily basis to help his three-year-old son, Tyler, complete his schoolwork. Eric now uses the app to scan Tyler’s work so he can review it, but just two years ago, that same interaction would have required the assistance of a sighted person. Technology has, quite literally, removed that barrier so that a father can interact directly with his child, and that’s the fundamental call to action that the program is putting out for developers, NGOs, academics, researchers and inventors – to accelerate their work for people with disabilities as they develop new technology products.

But while all this brings enormous possibilities for supporting vulnerable people and assisting disabled users in their everyday lives, Smith stresses that by innovating for people with disabilities, they are better innovating for us all – something that Lay-Flurrie herself helped to demonstrate on stage at Build.

The live demo showcased how AI could facilitate better collaboration and efficiency in the workplace, using features such as facial recognition and live captioning and translation technology. Lay-Flurrie joined the meeting with her sign language interpreter at a later stage, and remarked that the fact that the meeting was being captioned allowed her to participate more fully as she didn’t have to worry about constantly taking notes. Although note-taking might prove more difficult for someone with a disability such as hers, captioning is certainly something that most people would find rather useful in such a scenario.

“By ensuring that technology fulfills its promise to address the broadest societal needs, we can empower everyone – not just individuals with disabilities – to achieve more,” Smith concludes.
