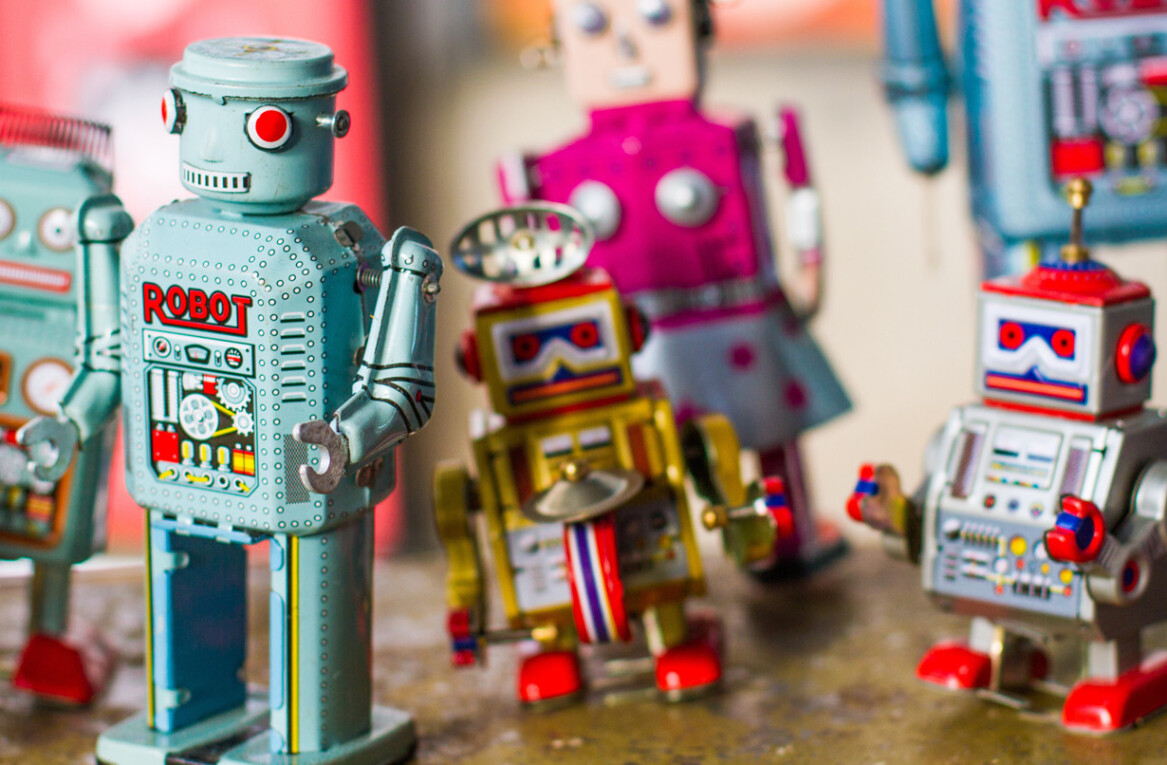

Thirty years ago, Peter Weller took on the role of RoboCop, a dystopian entity revived by mega-corporation Omni Consumer Products (OCP) as a superhuman law enforcer running the Detroit Police Department. Badass cop, but maybe not the most effective one.

RoboCop had a classified fourth directive: never to arrest or attack anyone from OCP, the company that made it. But gone are the days when this sci-fi action film was seen as just another fantastical blockbuster. We have entered an era that some find concerningly familiar, except that in this version of events, tech giants YouTube, Facebook, Google, and Twitter are cast as the RoboCop entity policing the internet and its content.

The dark web

We’ve all heard of the perils attached to the ‘dark web’, with gun trading, drug deals, and other black-market activity taking place under the condition of anonymity. Even on the ‘surface’ side of the web, we’re seeing violent language, extremist content, and radical groups springing up while fake news is spread to distort current affairs to suit the agendas of those distributing it.

So, whose responsibility should it be to deal with such a complex web of issues? It inevitably lies in the hands of the tech giants that currently rule the internet.

YouTube recently announced that its use of machine learning has doubled the number of videos removed for violent extremism, while Facebook has announced that it’s using artificial intelligence to combat terrorist propaganda. Both YouTube and Facebook have also announced that they are working with Twitter and Microsoft to fight online terrorism by “sharing the best technological and operational elements.”

The human nature of decision making

While it is of course welcome news that these firms are doing their part to make the internet a safer place, they have to ensure they maintain a democratic approach to their ‘policing’.

Planet Earth consists of 196 countries and 7.2 billion people. There’s no one aligned culture, experience, religion, or government. Considering the sheer breadth of differences across civilization, it’s undeniable that when humans are left to make such decisions, they will base them on their own subjective opinions, shaped by their surroundings. This is human nature.

So, when considering complex issues such as combatting ‘violent extremism’ online, how are employees of these big tech firms supposed to come to an informed decision on what is and isn’t classified as extremist?

As things are now, the decisions will undoubtedly be influenced by their own experiences and subjective bias.

AI and machine learning as a solution

Machine learning and AI can remove the interference of any biased decision-making. Machines can make thousands of decisions about the sentiment of content and, if it is threatening, remove it before humans are exposed to it.

In my media world, AI is used to help brands understand where they should buy media. And in an industry where human bias in this decision-making process has caused a lack of trust, AI can step in and remedy that.

Based on input from news sources, labels, and articles, machines can interpret the meaning of violent extremism. Using this, organisations can then combat inherent issues with the web, anything from fake news to criminal behavior and extremist content.
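To make that idea a little more concrete, here is a minimal sketch of how a machine might learn from labeled text. It is an illustration only, not the method any of the companies above use: the training sentences, the `flag` and `ok` labels, and the function names are all made up for this example, and the technique (a simple naive Bayes word-count classifier) is just one common choice.

```python
import math
from collections import Counter

# Hypothetical, hand-written examples. A real system would need a far larger,
# carefully reviewed labeled corpus.
TRAINING = [
    ("join us and attack them tonight", "flag"),
    ("we will destroy and attack the enemy", "flag"),
    ("violence is the only answer join us", "flag"),
    ("recipe for a quick weeknight dinner", "ok"),
    ("the football match ended in a draw tonight", "ok"),
    ("new phone review and camera test", "ok"),
]


def train(examples):
    """Count how often each word appears under each label."""
    word_counts = {}
    label_counts = Counter()
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in text.lower().split():
            counts[word] += 1
            vocab.add(word)
    return word_counts, label_counts, vocab


def classify(text, model):
    """Return the label with the highest naive Bayes log-score."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        # Start from the prior: how common this label is in the training data.
        score = math.log(label_counts[label] / total_docs)
        # Add-one (Laplace) smoothing so unseen words never give zero probability.
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][word] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)


MODEL = train(TRAINING)
print(classify("attack the enemy tonight", MODEL))
print(classify("quick dinner recipe", MODEL))
```

In this sketch the output depends entirely on the labeled examples supplied, which is why the quality of the "news sources, labels, and articles" fed to such a system matters so much.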

At this point, I can hear the critics typing away their concerns in the comments to this article. What about when the machine becomes so intelligent it can do the job without human input? What happens when the machine develops emotions? What happens if someone gets hold of the AI machine and uses it to the detriment of others?

For this technology to develop emotions, or any sort of emotional feedback that gives it more control, would require extremely elaborate data representation and programming that hasn’t yet been developed. These machines learn within set parameters, and everything a machine can currently do comes down to performing better on narrow tasks.

Where to next?

It’s up to the tech giants to ensure their policing of the internet is fair and impartial, and will not lead to the emergence of a real-life Robocop — and the way to achieve that is by using AI.

While 2017 has seen many stories that show these tech giants to be bullish in their approach — demonstrating a lack of trust and transparency between them and other internet users — the more recent announcements made by YouTube, Google, Facebook, Twitter, and Microsoft to rectify issues such as online extremism and terror content are certainly steps in the right direction.

As these firms come under political and societal pressure to invest heavily in helping make the internet great again, we can prevent the real-life emergence of RoboCop and instead use AI and machine learning to police the internet fairly and impartially. We can then create an online world that combats extremism and brings about greater societal change — which we desperately need at the moment.

By treating every single entity on the web equally when deciding what content is acceptable and unacceptable — including the movements of those who are predominantly in control — we will be able to bring the internet back to its original purpose: to inform, connect and educate.
