Back in 2007, when London was booming as the financial capital of the world, a new field called “algorithmic trading” was emerging. In essence, it is about leveraging Artificial Intelligence to place bets on financial markets faster than any human can. Like most PhD students doing AI, I was working with banks to help them build their trading algorithms, which back then represented about 3% of their activity.

Fast forward to 2017, and this type of trading represents over 90% in some cases, almost completely replacing human traders in big banks. One of those victims turned out to be my own dad, a trader who worked passionately for over 40 years. He is now out of a job because people like me built the technology that replaced him.

Seeing my own dad lose the job he loved was a wake-up call that I had to do something to prevent this from happening to other people. This is why I decided to join the French government in defining its AI strategy, and make France a country where humans and machines can prosper together.

Alongside an amazing team from France Stratégie and the French Digital Council, we interviewed more than 60 people from various industries to understand how they will be impacted, and published a report building on a recent study of job automation in France.

As we were working on this, one thing became clear: the magnitude of the impact of AI in the next decade is way beyond anything we ever imagined. It is the real issue facing our government leaders today, as failure to transition to a sustainable AI society will lead to massive job loss and economic downturn.

We first need to handle Narrow AI before worrying about Super-Intelligence

The first thing to consider when looking at the impact of machines on our lives is the type of AI we will actually deal with. The three common types are:

- Narrow AI: the ability for a machine to reproduce a specific human behavior, without consciousness. Basically it’s just a powerful tool to automate narrow tasks, like an algorithm would. Also called Weak AI.

- Strong AI: the ability for a machine to reproduce human intelligence fully, including abstraction, contextual adaptation, etc. Also called AGI, Full AI or General AI.

- Super-Intelligence: a strong AI that is more intelligent than the intelligence of all humans combined.

Today, we are only able to achieve Narrow AI. Strong AI and Super-Intelligence have captured our imagination, but we are still nowhere near achieving them. Can it be done one day? Maybe. But that would be missing the point that in the next decade, Narrow AI will already have destroyed our society if we don’t handle it correctly.

In fact, Narrow AI is already a very powerful tool. It can recognize what is in an image. It can beat the world champion at Go. It can reproduce the style of any master artist. It can understand natural language queries. It can automate your house and drive your car. It can generate images that look real. It can diagnose cancer better than a doctor. And it can recognize your kids in a picture better than you can yourself.

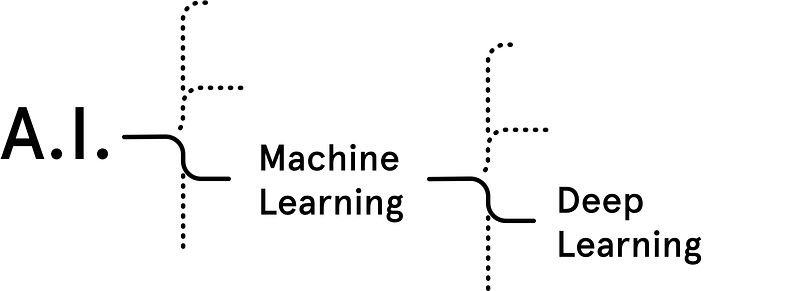

But at the end of the day, AI is just a technology, albeit one that is still hotly debated. Indeed, as AI becomes mainstream, companies are looking for ways to market it to more people, turning it into an umbrella term — just as they did with Big Data and Cloud Computing before. Here, we used the following definition, inspired by the six min intro to AI:

- Artificial Intelligence: the ability for a machine to reproduce human behavior. This is regardless of the technique used to achieve it.

- Machine Learning: a family of AI algorithms where machines learn from examples how to reproduce a given behavior. There are many types of machine learning algorithms: neural networks, support vector machines, decision trees, etc.

- Deep Learning: a family of machine learning algorithms where successive layers of data in a neural network are combined to learn increasingly abstract concepts.

Basically, Deep Learning is a branch of Machine Learning, which is a branch of Artificial Intelligence.

Today, most people doing AI do Machine Learning, and most people doing Machine Learning do Deep Learning, so you would be forgiven for using them interchangeably.

The main reason why AI went mainstream recently is that for the first time, a combination of two exponential trends — cheap computing power and availability of large sets of data — made it possible to teach machines instead of programming them. Said differently, AI became possible because we invested massively into Cloud Computing and Big Data.

Indeed, up until recently, when we wanted to automate a task, we had to first understand its behavior in every detail, then write a computer program (an algorithm) to automate it. For instance, to make a machine that can recognize a cat, a human engineer had to figure out what makes a cat unique (pointy ears, fur, a tail, funny looks, etc.), and then find a way to represent this in a computer program. The more complex the task, the more resources it required to be automated, assuming a human could understand it in the first place.

With AI on the other hand, there is no need to actually understand what’s going on, since the machine will learn to reproduce the behavior by itself. To do so, a human engineer compiles a large set of example data, and feeds it to a generic machine learning algorithm that then learns to reproduce the behavior in the examples.

The human engineer no longer has to understand what a cat is; she simply has to give the computer examples of cats and let it figure it out! And since collecting data is much easier and faster than understanding what’s going on, the rate at which we can automate a task is now orders of magnitude faster.
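To make the contrast concrete, here is a minimal sketch of the two approaches. Everything in it is an illustrative assumption: the two numeric features, their values, and the function names are made up, and the learner is a simple nearest-centroid classifier rather than the deep learning systems discussed above.

```python
import math

def is_cat_by_rule(pointy_ears: float, whisker_length: float) -> bool:
    # "Programming": the engineer chooses the features and thresholds by hand.
    return pointy_ears > 0.5 and whisker_length > 0.5

def train(examples):
    # "Teaching": average the labelled examples into one centroid per label.
    sums = {}
    for features, label in examples:
        total, count = sums.get(label, ([0.0] * len(features), 0))
        sums[label] = ([a + b for a, b in zip(total, features)], count + 1)
    return {label: [v / n for v in total] for label, (total, n) in sums.items()}

def predict(centroids, features):
    # Pick the label whose centroid is closest to the new example.
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

# Hypothetical training data: (pointy_ears, whisker_length) scores in [0, 1].
examples = [
    ((0.9, 0.8), "cat"),
    ((0.8, 0.9), "cat"),
    ((0.2, 0.1), "not cat"),
    ((0.1, 0.3), "not cat"),
]
centroids = train(examples)
prediction = predict(centroids, (0.7, 0.75))
```

In the first function the knowledge lives in the thresholds the engineer wrote; in the second, changing the behavior only requires changing the examples.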

Humans and Machines must be thought of as complementary

This revolution in automation is unprecedented, and applies not just to low income jobs, but to any category. Given how widespread and fast the disruption will be, we have to stop thinking Man against Machine, and instead think Man + Machine.

For example, a medical doctor’s job is to collect data about the patient, make a diagnosis, find an appropriate treatment, and then help the patient get better by engaging on an emotional level. Since Narrow AI can already analyze and diagnose x-rays better than humans, find the most appropriate treatment and order more relevant tests, what will be the role of the doctor in 2022? Most likely, common illnesses will be treated by an AI doctor, while human doctors will figure out how to handle complex cases (requiring general intelligence) and help patients recover (requiring emotional intelligence). Doctors won’t disappear, but their job will be transformed to become that of a medical researcher combined with a nurse.

As it turns out, many jobs will follow a similar pattern: they will be transformed rather than disappear. With this hypothesis in mind, we created a simple framework to determine the likelihood that a job will be automated. First, split the job into its tasks, including both those that are officially part of the job, and those that people do in practice. Then for each of these tasks, check how likely it is to be automated based on the following criteria:

- Is it technically doable? Sometimes the technology or data simply doesn’t exist, and thus, no matter how much we want to automate the task, it is actually impossible.

- Does it require complex manual intervention? As it turns out, robotics isn’t following the exponential trend of AI, mostly due to the time and cost of building and testing a robot. Something as simple as making coffee is out of reach for robots, since each machine is different and the required hand movements are very complex. This means that most non-repetitive manual jobs are safe.

- Is it socially acceptable? Just because a task is automatable doesn’t mean we want to actually automate it. A good example is a soccer referee. Current AI technologies could easily analyze the game in real time and apply the rules on the fly. But doing this would actually make the game boring, as it would stop constantly! The human referee has the ability to supersede the rules, judging from context whether to follow them or to let play.

- Does it require emotional intelligence? This one is obvious to most people. Some tasks require a subtle understanding of human emotion. And although a machine could show empathy, it won’t actually feel empathy. This is why a human doctor will always be better at delivering a diagnosis than a machine, and why human managers will always be better than AI ones!

- Does it require general intelligence? AIs today do much better than humans at specific, narrow tasks, but are unable to generalize what they learned to other problems, or adapt to context. For example, an autonomous car can learn to drive on a road better than a human, but won’t know how to handle improbable situations. This was brilliantly exemplified by an artist who created an autonomous car trap that consists of a dotted white circle surrounding a solid white circle. A human would immediately spot that this is absurd, but an AI would think “oh I can cross that dotted line”, only to be trapped inside because it wouldn’t be able to cross the solid line! Human: 1, AI: 0.

If more than 2/3 of a job’s tasks are automated, then the job will disappear. If fewer than 1/3 are automated, then nothing will change. In all other cases, the job will be transformed.
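The framework above can be sketched in a few lines of code. This is only an illustration under stated assumptions: it treats a task as automatable when it passes all five criteria, and the class, the function names and the breakdown of a doctor's job into five tasks are hypothetical choices made for the example.

```python
from dataclasses import dataclass
from fractions import Fraction

@dataclass
class Task:
    name: str
    technically_doable: bool = True
    socially_acceptable: bool = True
    needs_complex_manual_work: bool = False
    needs_emotional_intelligence: bool = False
    needs_general_intelligence: bool = False

    def is_automatable(self) -> bool:
        # Assumption: a task is automated only if it passes all five criteria.
        return (
            self.technically_doable
            and self.socially_acceptable
            and not self.needs_complex_manual_work
            and not self.needs_emotional_intelligence
            and not self.needs_general_intelligence
        )

def job_outlook(tasks: list[Task]) -> str:
    if not tasks:
        raise ValueError("a job needs at least one task")
    # Exact fractions avoid rounding issues right at the 1/3 and 2/3 boundaries.
    share = Fraction(sum(t.is_automatable() for t in tasks), len(tasks))
    if share > Fraction(2, 3):
        return "disappears"
    if share < Fraction(1, 3):
        return "unchanged"
    return "transformed"

# Hypothetical task breakdown for a medical doctor.
doctor = [
    Task("collect patient data"),
    Task("diagnose common illnesses"),
    Task("choose treatment for common illnesses"),
    Task("handle complex cases", needs_general_intelligence=True),
    Task("support the patient's recovery", needs_emotional_intelligence=True),
]
outlook = job_outlook(doctor)
```

With three of five tasks automatable, the share falls between 1/3 and 2/3, so this breakdown classifies the doctor's job as transformed.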

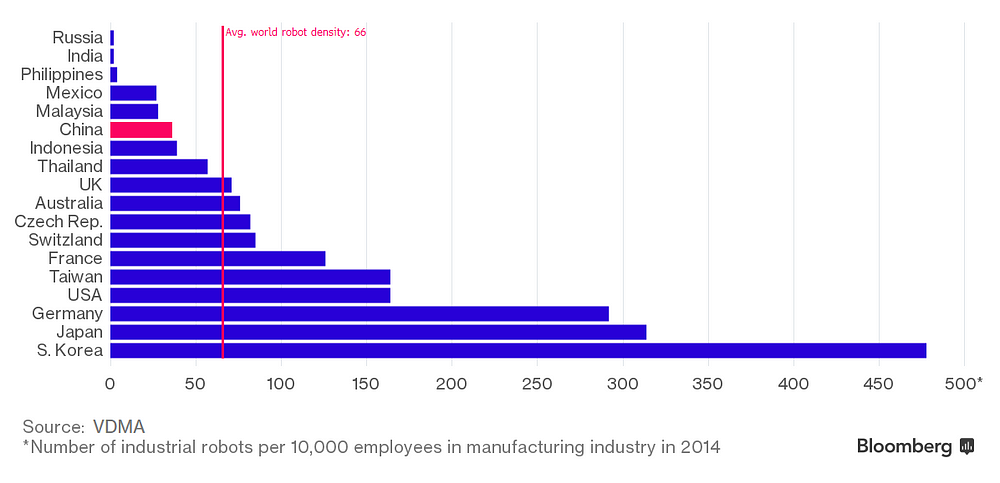

Of course, different countries will be impacted differently. For instance, South Korean, Japanese and German manufacturing are already heavily automated, and as such probably won’t suffer much. China in comparison has a lot more to lose.

A recent report by France’s Employment Council determined that in France, about 10% of jobs will disappear, 50% will be transformed, and the remaining 40% won’t change. Here are some examples for each:

- Jobs that will likely disappear: truck driver, taxi driver, train driver, radiologist, accountant, paralegal, financial trader, financial broker, help desk operator, real estate agent, news writer, unskilled factory worker, etc.

- Jobs that won’t change much: nurse, teacher, psychologist, team manager, creative, news analyst, consultant, research scientist, medical researcher, philosopher, designer, artist, artisan, chef, actor, etc.

- Jobs that will be transformed: claim manager, personal banker, software engineer, data scientist, medical doctor, skilled factory worker, lawyer, movie director, song writer, script writer, plumber, electrician, etc.

- Jobs that will be created: it’s quite hard to predict how many jobs will actually be created, but some are already starting to appear, such as the AI supervisor (who double checks the results of the AI), data labeler (most likely the factory job of the future, an extension of Mechanical Turk today), AI lawyer (who defends machine rights), voice designer (designing voice interfaces and brands instead of visual ones), etc.

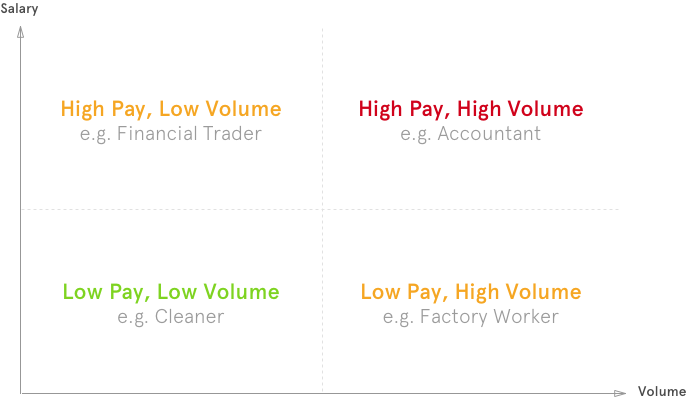

What is remarkable is that, contrary to popular belief, many high-paying jobs will also disappear, as we saw with financial traders. Among jobs that are automatable and lead to clear productivity gains, the order in which they get automated will be a function of how well paid each job is and how many people hold it. For instance, traders are few but highly paid, and so will be disrupted as fast as factory workers, who are many but underpaid. The net cost reduction in both cases is similar, although the social cost is higher when more people lose their jobs.
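As a back-of-the-envelope illustration of that trade-off, the saving from automating a job category can be approximated as headcount times average salary. The figures below are hypothetical and chosen only to show how a small, well-paid group and a large, low-paid group can represent the same cost reduction.

```python
def annual_saving(headcount: int, average_salary: int) -> int:
    # Rough proxy: total payroll no longer paid once the job is automated.
    return headcount * average_salary

# Hypothetical figures, not taken from any report.
traders = annual_saving(headcount=1_000, average_salary=300_000)
factory_workers = annual_saving(headcount=10_000, average_salary=30_000)
```

Both categories come out at the same total in this example, yet ten times more people are affected in the second one, which is where the higher social cost comes from.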

Countries with strong welfare systems (such as France and northern Europe) will probably be able to handle the additional unemployment (unlike America and China), so the real issue will be around the 50% whose jobs will be transformed.

Solving the AI and Job crisis isn’t about solving mass unemployment. It’s about solving mass continuous education.

We already saw how a doctor’s job will change; in most cases, they won’t have to diagnose and figure out the right treatment since machines will do that better and faster. But they will still keep the human relationship to patients, and supervise the AI to make sure the results aren’t completely off. This has two profound implications: the first is that the power balance — and hence the difference in compensation — between medical professions will shift tremendously. The second is that we will need to find a way to continuously educate all those whose jobs will change, and let go of the traditional education model where we learn once and apply for the rest of our lives.

We must rethink the education system in the age of AI

The good thing about delegating tasks to AIs is that we won’t have to learn as many skills to do a job. This is because the most tedious bits, the hard skills (coding, maths, processes, etc.), are more likely to be automated than the soft skills (empathy, management, leadership, creativity, etc.). Given that soft skills are also more transferable from one job to another, it will take far less time to learn a new job in 2022 than it does today.

What this requires, though, is the ability to learn continuously, which in turn requires time and good educational resources. Rather than a four-year degree, taking short classes (online or offline) and doing an internship will likely be enough for most jobs. We are already seeing this today, for example with Uber drivers or Data Scientists: official degrees typically get created only after there is demand in the market, which pushes these workers to learn by themselves and continuously improve their skills once confronted with real-world problems.

Instead of being stuck in a career based on what we studied many years ago, we will study continuously throughout our lives and change jobs as easily as we move houses.

How can we promote continuous education? The first thing is to acknowledge that companies and governments will both need to work together to make it happen. For example in France, there is a national employment center (Pole Emploi). Everybody knows about it, and they have more reach than any private platform. If they became an education center with an online platform like Coursera, then private companies could contribute the content, for free or for a fee. This would combine the best of both worlds: the distribution capabilities of a government, and the real-world experience of private companies who will need to hire the people down the line.

There is an interesting collateral effect that might happen as well: as education becomes shorter and more accessible, people will be able to switch careers faster and more easily. This in turn leads to more liquidity in the job market, as those needing a job will have more options, and those looking to hire won’t have to wait for someone with the right education. The supply and demand of jobs will be more balanced, meaning lower welfare costs, more productivity, consumption, competitiveness and, most importantly, happiness.

Furthermore, as some jobs will likely be more disrupted than others, there is an opportunity to do preventive education, where people in high-risk jobs can leave before getting laid off in order to transition to something new. How can they live if they are no longer working, you might ask. One solution is to take inspiration from Universal Basic Income, but instead offer a Universal Education Income: a monthly salary given by the government to those studying, regardless of their situation. This would be complemented by a more comprehensive unemployment insurance, enabling people with families to quit their job to study again. Student loans and end-of-month financial struggles should not be the price to pay to be employable in the age of AI.

Of course we could all be wrong, and AI could be another fad. Maybe we will reach a plateau, or maybe we will flat out refuse to live alongside machines. But the stakes are simply too high to take that bet. And although it is too late for my dad, it isn’t for the rest of us: we can build a future where machines augment us instead of taking over!
