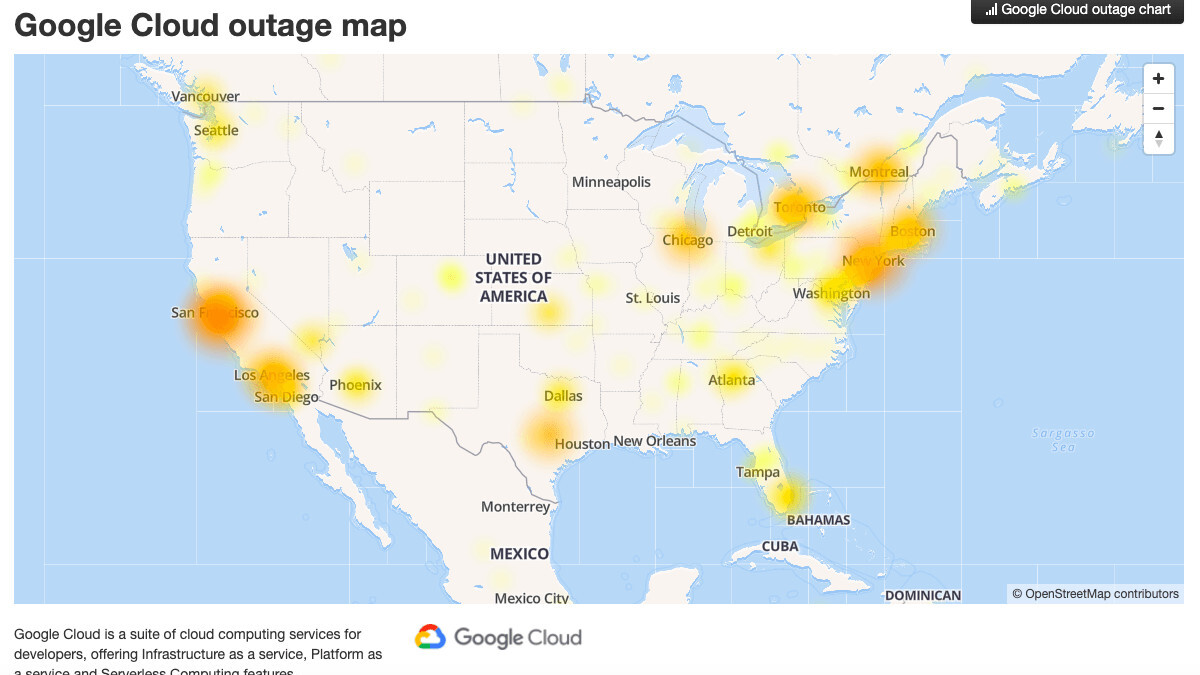

Gmail, YouTube, and other services that rely on Google’s backend technology were disrupted for more than four hours on Sunday by what the company described as “high levels of network congestion.”

Apple iCloud, Snapchat, Nest, Discord, Vimeo, and a number of other third-party applications that host their services on Google were affected as a result. Several of Google’s own products, such as Admin Console, Google Sync for Mobile, and G Suite, were also impacted.

The issues started around 12PM PT / 3PM ET, before being completely resolved around 5PM PT / 8PM ET.

Google’s G Suite Status Dashboard showed that there were problems with almost every single service at some point, barring Google+ and Google Cloud Search.

The internet giant didn’t elaborate on what caused the extended outage, but apologized for the inconvenience caused.

“We will conduct an internal investigation of this issue and make appropriate improvements to our systems to help prevent or minimize future recurrence. We will provide a detailed report of this incident once we have completed our internal investigation,” it said in the incident report.

Google’s cloud services have been down before. YouTube went offline a couple of times back in January and October, and a string of Google Cloud outages in July and November took down some of the world’s most popular applications like Snapchat, Spotify, and Nest.

The incident, although resolved, calls the reliability of Google’s cloud infrastructure into question. It also highlights the consequences of excessive reliance on one company for backend services.

With most companies offloading their entire backend to Google (or Amazon, or Microsoft), they become fully dependent on a single provider in order to operate. It remains to be seen what the post-mortem of the disruption reveals.

We’re keeping an ear to the ground for more details on Google’s outage, and will update this post when we learn more.

Update (10:30 AM IST, 4 June 2019): Google has provided more details about the outage in a separate blog post. It said the issue stemmed from a server configuration change that led network traffic to be routed incorrectly. It also said the company’s engineering teams detected the issue quickly, but “diagnosis and correction” took longer than expected.

“In essence, the root cause of Sunday’s disruption was a configuration change that was intended for a small number of servers in a single region. The configuration was incorrectly applied to a larger number of servers across several neighboring regions, and it caused those regions to stop using more than half of their available network capacity. The network traffic to/from those regions then tried to fit into the remaining network capacity, but it did not. The network became congested, and our networking systems correctly triaged the traffic overload and dropped larger, less latency-sensitive traffic in order to preserve smaller latency-sensitive traffic flows, much as urgent packages may be couriered by bicycle through even the worst traffic jam.”
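To make the triage behavior described above more concrete, here is a minimal Python sketch of priority-based load shedding. It is an illustration only, not Google’s implementation: the `Flow` type, the `triage` function, the flow names, and the bandwidth figures are all hypothetical. It assumes a simple greedy policy in which latency-sensitive flows are admitted first, smaller flows come before larger ones, and whatever no longer fits in the reduced capacity is dropped.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    # Hypothetical traffic flow; names and numbers are illustrative only.
    name: str
    demand_gbps: float
    latency_sensitive: bool


def triage(flows, capacity_gbps):
    """Greedily admit flows into the available capacity.

    Latency-sensitive flows are considered first, and within each group
    smaller flows are considered before larger ones. Flows that do not
    fit in the remaining capacity are dropped.
    """
    ordered = sorted(flows, key=lambda f: (not f.latency_sensitive, f.demand_gbps))
    admitted, dropped = [], []
    remaining = capacity_gbps
    for flow in ordered:
        if flow.demand_gbps <= remaining:
            admitted.append(flow)
            remaining -= flow.demand_gbps
        else:
            dropped.append(flow)
    return admitted, dropped


flows = [
    Flow("bulk-backup", 60.0, False),
    Flow("video-upload", 30.0, False),
    Flow("rpc-control", 5.0, True),
    Flow("dns", 1.0, True),
]

# Assume the region has lost more than half of its capacity: 96 Gbps of
# demand now has to fit into 40 Gbps.
admitted, dropped = triage(flows, capacity_gbps=40.0)
print("admitted:", [f.name for f in admitted])
print("dropped:", [f.name for f in dropped])
```

In this toy scenario the small latency-sensitive flows survive and the largest bulk transfer is shed, which mirrors the bicycle-courier analogy in Google’s explanation.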

Update (12:30 PM IST, 11 June 2019): Google has offered more context surrounding the outage in a detailed incident report. In a note updated on June 6, the internet giant blamed the service disruption on “multiple failures.”

“Two normally-benign misconfigurations, and a specific software bug, combined to initiate the outage: firstly, network control plane jobs and their supporting infrastructure in the impacted regions were configured to be stopped in the face of a maintenance event. Secondly, the multiple instances of cluster management software running the network control plane were marked as eligible for inclusion in a particular, relatively rare maintenance event type. Thirdly, the software initiating maintenance events had a specific bug, allowing it to deschedule multiple independent software clusters at once, crucially even if those clusters were in different physical locations.”
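The interaction of these three factors can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the actual maintenance software: the `Cluster` type, the two selection functions, the cluster names, the locations, and the `"rare-maintenance"` event type are all hypothetical. The first function models the reported bug by ignoring where the maintenance event is taking place; the second shows one possible guard that limits descheduling to the event’s own location.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    # Hypothetical model of a cluster running network control plane jobs.
    name: str
    location: str
    eligible_event_types: frozenset


def select_unscoped(clusters, event_type, event_location):
    """Models the bug: any eligible cluster is selected for descheduling,
    regardless of where the maintenance event actually occurs."""
    return [c.name for c in clusters if event_type in c.eligible_event_types]


def select_scoped(clusters, event_type, event_location):
    """One possible guard: only clusters in the event's own location
    may be descheduled."""
    return [
        c.name
        for c in clusters
        if event_type in c.eligible_event_types and c.location == event_location
    ]


rare = frozenset({"rare-maintenance"})
clusters = [
    Cluster("ctrl-a", "location-1", rare),
    Cluster("ctrl-b", "location-2", rare),
    Cluster("ctrl-c", "location-3", rare),
]

# A maintenance event that is supposed to touch a single location.
print(select_unscoped(clusters, "rare-maintenance", "location-1"))
print(select_scoped(clusters, "rare-maintenance", "location-1"))
```

With every cluster marked eligible for the rare event type, the unscoped selection takes down the control plane in all three locations at once, while the scoped version affects only the location under maintenance.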

The combined effect of these issues, which started at 11:45 AM US/Pacific, resulted in a significant reduction in network capacity, with end-user impact beginning to be seen in the period 11:47-11:49 US/Pacific.

To prevent such failures going forward, Google has halted the use of the automation software that deschedules jobs in the face of maintenance events. In addition, it said it will rework its network configurations to reduce the recovery time required for such disruptions. It also added that the post-mortem “remains at a relatively early stage.”

We have updated the story to reflect the latest developments.
