A trio of Samsung AI researchers recently developed a neural network capable of rendering photorealistic graphical scenes with a novel viewpoint from a video.

In the above video we see a 3D rendering of a complex scene. It was created by turning a video input into a bunch of points representing the geometry of the scene. The points, which form a cloud, are then fed to a neural network that renders them as computer graphics.

TNW reached out to Dmitry Ulyanov, a researcher at Samsung and one of the authors on the team’s paper, to find out more about the project. He told us:

The idea is to learn to render a scene from any standpoint. The only input is [a] user’s video of the scene made in a rather free manner. In the videos we show the rendering of the scene from a novel view (we take the trajectory of the camera from the train video and shift it by a certain margin … that’s how we generate the new trajectory).

Ulyanov worked with fellow researchers Kara-Ali Aliev and Victor Lempitsky to develop the network. Currently it can handle slight shifts in perspective, such as zooming or moving the “camera.” What you’re seeing in that above video isn’t the original input, but a rendering of it.

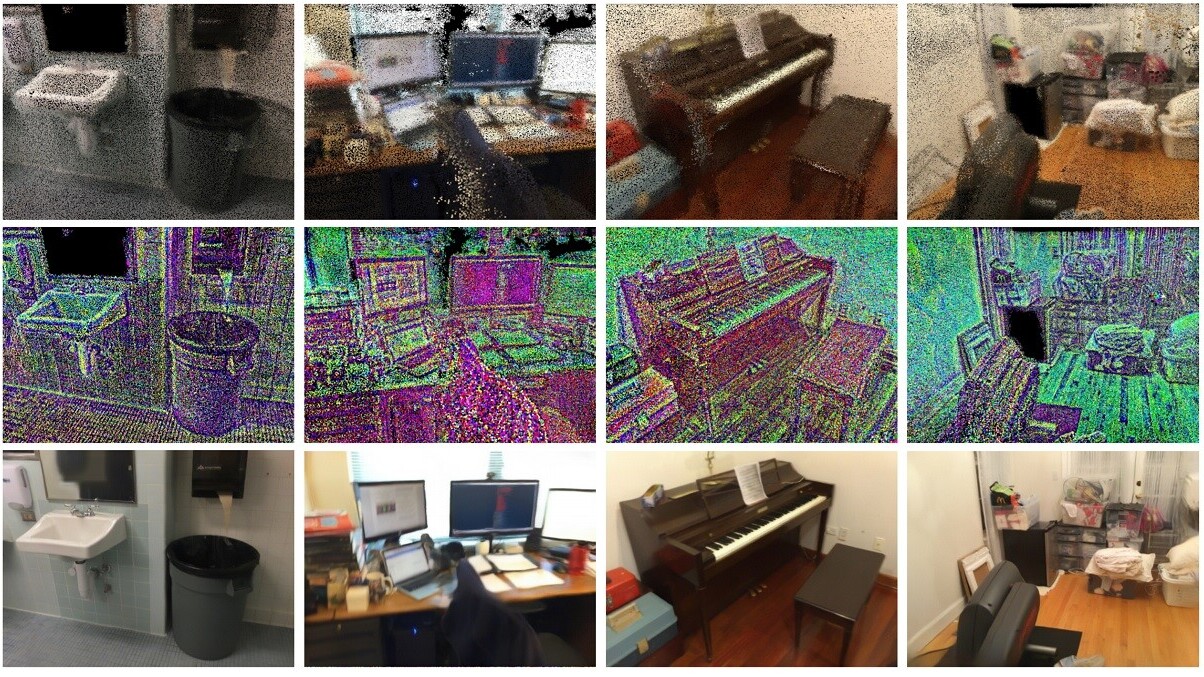

Check out these side-by-side pictures of both rendered and nearest ground-truth (the ones on the left were rendered by the AI, the ones on the right are the closest matching frames from the original video input):

Typically this kind of rendering is done using meshes – overlays that define the geometry of objects in space. Those methods are time-consuming, processor intensive, and require human expertise. But the neural point-based system creates results in a fraction of the time with a comparatively small amount of resources. Ulyanov told us:

Traditional methods for photo-realistic rendering like ray-tracing in fact require huge amount of compute. Two minutes is in fact a small wait time for the modellers, who work in software like Blender, 3DS Max, etc. They sometimes wait for rendering for hours. And in many cases their task is to reproduce a real scene! So they first spend time modelling and then wait for it to render each frame. And with the methods like ours you only need a video of the scene and wait for 10 minutes to learn the scene. When the scene is learned the rendering takes 20ms per frame.

This work is currently in its infancy. Ulyanov told us that it’s still quite “stupid,” in that it can only attempt to reproduce a scene – ie., you can’t alter the scene in any way. And any extreme deviation from the original viewpoint results in artifacts.

But, those of us who remember how brittle Nvidia’s StyleGAN was just a few years ago understand how quickly things can scale in the AI world once you get the basic recipe right.

Today we’re seeing an AI render photorealistic graphics from a video input in real time with a novel viewpoint including perspective-dependent features like reflections and shadows. One day we might be able to slip on a virtual reality headset and take a walk inside of a scene from our favorite films. Imagine training a neural network on your old home movies and then reliving them in VR from any perspective.

We might not be quite there yet, but the writing’s on the wall. Ulyanov says they “actually have a standalone app where we can freely travel in the first-person mode in the scene.”

For more information about neural point-based graphics: read the paper here and check out the project’s Github page here.

Get the TNW newsletter

Get the most important tech news in your inbox each week.