California-based nonprofit artificial intelligence lab OpenAI has cautiously revealed the capabilities of its latest AI, which it’s calling GPT-2. The system can generate surprisingly convincing text to follow any sample you throw at it, like a news article headline, or the opening paragraph of a fictional tale, or a prompt for an essay on a specific topic.

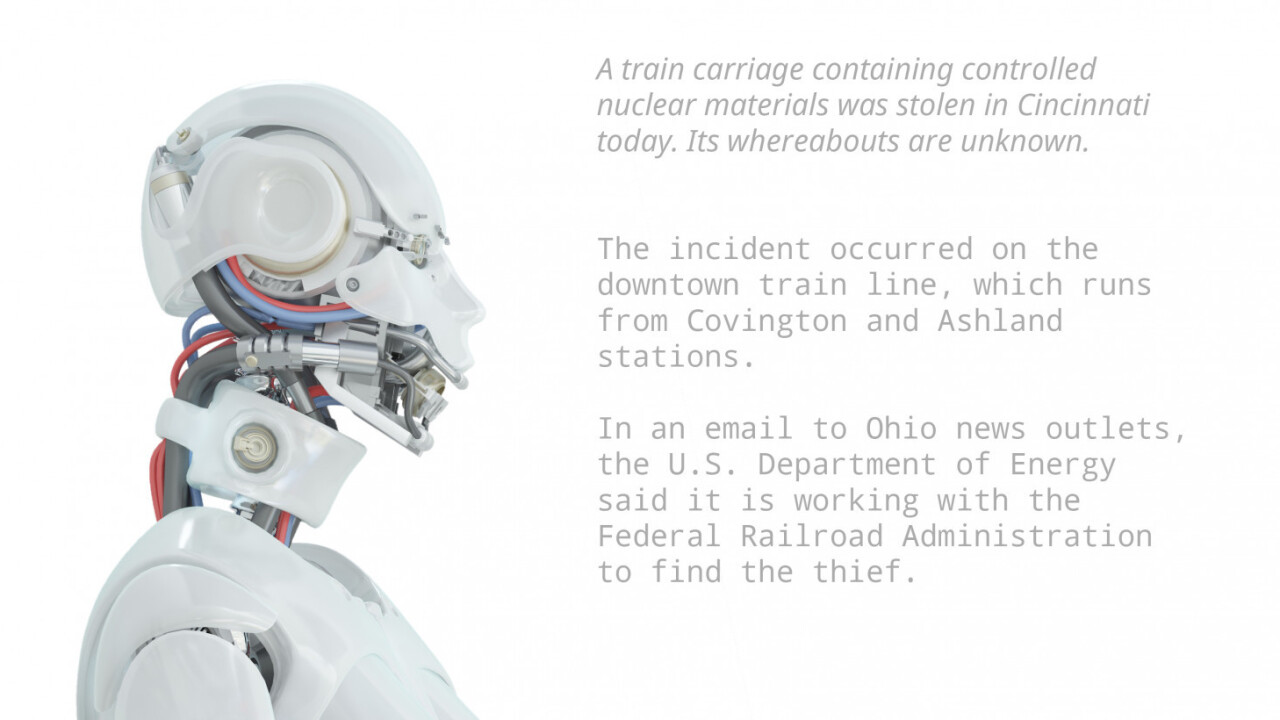

Here are some samples that OpenAI shared to illustrate what it can do:

The organization has made its AI available to a few media outlets to test, and you can see some great and not-so-great examples in coverage from The Verge and The Register.

First up: yes, it’s not perfect. It can make mistakes, such as repeating itself or losing the plot. OpenAI noted that it can take a few tries to get a good result, and that the quality of the output depends on how familiar the model is with the subject matter in the prompt. It can perform poorly if it hasn’t encountered that kind of content before.
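To get a feel for why "a few tries" matters, here is a minimal sketch of sampling-based text continuation. It is not GPT-2: it swaps in a toy bigram word table built from a made-up corpus, and the function names, the corpus, and the repetition score are all assumptions chosen for illustration. It only shows the general idea that each try samples a different continuation, so some tries repeat themselves more than others.

```python
import random
from collections import defaultdict

def build_bigram_table(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table

def continue_prompt(table, prompt, max_new_words, rng):
    """Extend the prompt one word at a time by sampling from the table."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = table.get(words[-1])
        if not candidates:
            # Unseen word: this toy model has nothing to continue with.
            break
        words.append(rng.choice(candidates))
    return " ".join(words)

def repetition_rate(text):
    """Fraction of words that repeat an earlier word (lower is better)."""
    words = text.split()
    return 1 - len(set(words)) / len(words)

# Hypothetical toy corpus standing in for real training text.
corpus = ("the cat sat on the mat and the dog sat on the rug "
          "and the cat saw the dog on the mat")
table = build_bigram_table(corpus)

rng = random.Random(0)  # fixed seed so the run is reproducible
tries = [continue_prompt(table, "the cat", 8, rng) for _ in range(5)]
best = min(tries, key=repetition_rate)  # keep the least repetitive try

for attempt in tries:
    print(f"{repetition_rate(attempt):.2f}  {attempt}")
print("kept:", best)
```

A prompt the table has never seen, such as `"zebra"`, comes back unchanged, which is a crude analogue of the model struggling with unfamiliar subject matter.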

However, the organization says GPT-2 can deliver better results than other AI models trained on specific datasets, like Wikipedia articles – without training on those same datasets itself. This is known as a zero-shot setting: the model is tested on a task it was never specifically trained for. High scores on this front are a notable achievement in AI development, because they suggest that GPT-2 is flexible enough to work competently in a wide range of use cases.
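One way to picture the zero-shot idea: instead of retraining the model for each task, you phrase the task as text for it to continue. The sketch below is a hedged illustration, not OpenAI's code. The "TL;DR:" cue for summarization is the one described in OpenAI's GPT-2 paper; the other templates, the function names, and the `complete` callable (a stand-in for whatever text generator you have access to) are assumptions.

```python
from typing import Callable

# Task name -> prompt template. Only the "TL;DR:" cue comes from the
# GPT-2 paper; the other two templates are hypothetical examples.
TEMPLATES = {
    "summarize": "{text}\nTL;DR:",
    "answer": "Q: {text}\nA:",
    "continue": "{text}",
}

def build_prompt(task: str, text: str) -> str:
    """Frame a task as plain text that a language model can continue."""
    if task not in TEMPLATES:
        raise ValueError(f"unknown task: {task}")
    return TEMPLATES[task].format(text=text)

def run_zero_shot(task: str, text: str, complete: Callable[[str], str]) -> str:
    """Send the framed prompt to a text generator and tidy the result."""
    return complete(build_prompt(task, text)).strip()

def placeholder_model(prompt: str) -> str:
    """Hypothetical stand-in so the sketch runs without a real model."""
    return " (generated text would appear here)"

print(build_prompt("summarize", "A long news article goes here."))
print(run_zero_shot("answer", "Who built GPT-2?", placeholder_model))
```

The same generator handles every task; only the framing of the prompt changes, which is what makes the approach flexible.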

The AI was trained on 40GB of text from the web – roughly 8 million pages collected by following outbound links from Reddit posts that received at least 3 karma – so it can generate a wide range of output, from seemingly real news articles to legit-sounding fan fiction.

I’m particularly fascinated by this essay above that GPT-2 generated in its attempt to debate against the idea that recycling is good for the world. Try to look past the repeated sentences and towards the end of the output, where it points to the waste generated in manufacturing the products we use. Smart stuff.

OpenAI says this is just the beginning; it doesn’t yet fully understand what GPT-2 is capable of, and what its limitations are. To that end, the researchers working on it will continue to feed GPT-2 more data and observe how it performs. As The Verge noted, its further development could lead to things like better chatbots for healthcare services, and the population of virtual worlds with an infinite number of AI-powered characters that have their own unique backstories.

However, this technology could also be misused to spread disinformation, derail conversations on social networks, and trick people into falling for a variety of online scams. I shudder at the thought of misleading deepfake videos of politicians spouting GPT-2-generated speeches.

To prevent it from falling into the wrong hands right away, OpenAI isn’t sharing the dataset it used to train GPT-2, and it’s only revealing part of the code behind the system for now. That may not stop malicious actors from trying to create similar AIs though.

If this development is anything to go by, the future of AI that can synthesize content that’s indistinguishable from what we produce is bright – and unnervingly dark at the same time.
