DeepMind previously took on the challenge of beating world champions at the Chinese game of Go. It’s also gotten astonishingly good at Chess and Shogi, and has wiped the floor with the best AIs developed for those games.

In August 2017, the Alphabet-owned firm decided to take on a much bigger and more complex challenge: training an AI to master StarCraft II. The immensely popular real-time strategy game sees players take charge of an alien race, gather resources, develop technologies, and pit their armies against others in a bid for supremacy in a fictitious sci-fi universe.

In a series of matches last month, DeepMind’s AlphaStar AI defeated high-ranking professional StarCraft II players TLO and MaNa; the bot lost only a single live match after its string of wins.

That’s a monumental achievement for the team behind the AI. Unlike turn-based games like Go and Chess, StarCraft II pits players against each other in real time, and requires them to develop and execute ‘macro’ battle strategies while also tackling ‘micro’ tasks like mining resources, preparing troops for the next siege, and fending off incoming attacks. Add to that the fact that pro players execute hundreds of actions per minute during competitive matches, and you’ve got yourself a serious challenge.

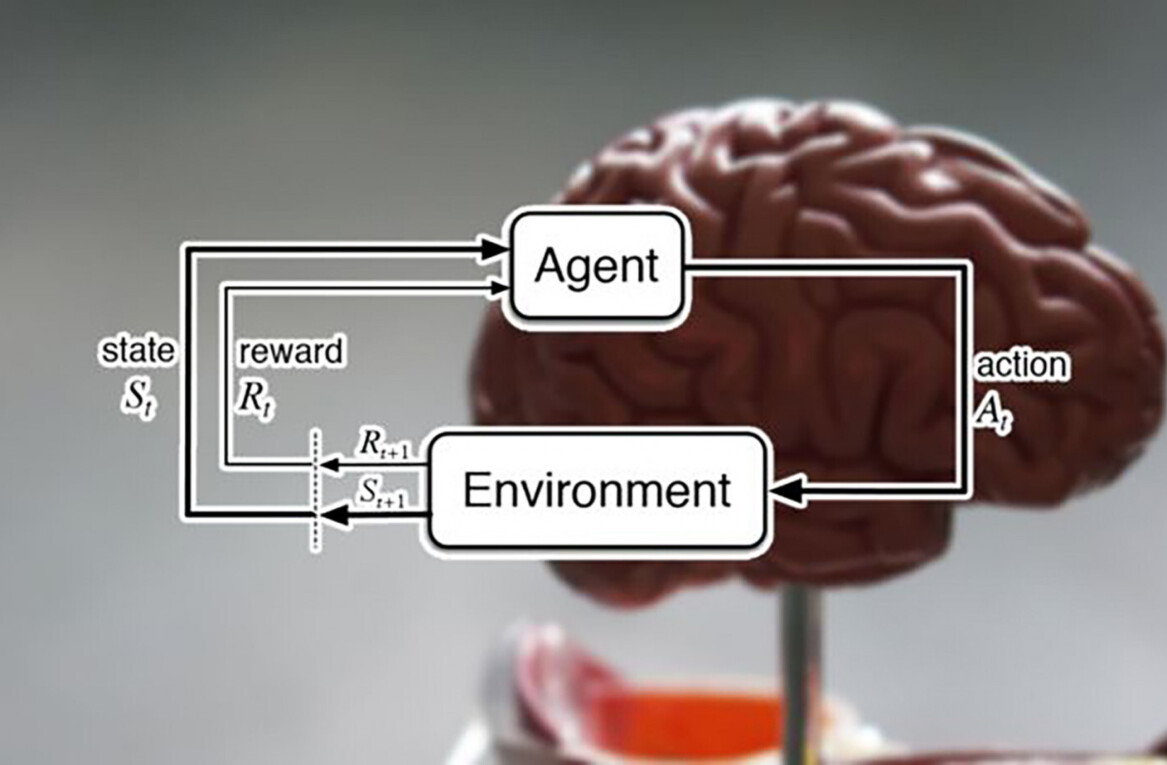

So how do you teach an AI to get better at a complex strategy game than some of the best human players on the planet? DeepMind’s method involved training AlphaStar over roughly 200 years’ worth of gameplay.

The team essentially created a league of AI agents that relied on a neural network trained on data from replays of matches played by humans (a match can last up to an hour). Over the course of seven days, the agents played against each other, testing strategies and learning from the ones that led to victories.
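To make the league idea concrete, here is a deliberately tiny sketch in Python. It is not DeepMind’s method: AlphaStar used deep neural networks and reinforcement learning, while this toy assumes three made-up strategy labels with rock-paper-scissors payoffs, and every name in it (`Agent`, `run_league`, `make_league`, the starting weights standing in for what was learned from human replays) is hypothetical. It only illustrates the loop described above: agents play each other, and a winner becomes a little more likely to reuse the strategy that won.

```python
import random

# Hypothetical strategy labels; each one beats exactly one other,
# rock-paper-scissors style. This is a toy payoff, not StarCraft II.
BEATS = {"rush": "expand", "expand": "defend", "defend": "rush"}


class Agent:
    def __init__(self, name, weights):
        self.name = name
        self.weights = dict(weights)  # preference per strategy, all positive
        self.wins = 0

    def pick(self, rng):
        # Sample a strategy in proportion to its current weight.
        names = list(self.weights)
        return rng.choices(names, weights=[self.weights[n] for n in names])[0]

    def reinforce(self, strategy, step=0.1):
        # Make a winning strategy slightly more likely next time.
        self.weights[strategy] += step


def play_match(a, b, rng):
    """Return (winner, winning_strategy), or (None, None) on a draw."""
    sa, sb = a.pick(rng), b.pick(rng)
    if BEATS[sa] == sb:
        return a, sa
    if BEATS[sb] == sa:
        return b, sb
    return None, None


def run_league(agents, rounds, seed=0):
    """Play repeated round-robins; winners reinforce what worked."""
    rng = random.Random(seed)
    for _ in range(rounds):
        for i, a in enumerate(agents):
            for b in agents[i + 1:]:
                winner, strategy = play_match(a, b, rng)
                if winner is not None:
                    winner.wins += 1
                    winner.reinforce(strategy)
    return agents


def make_league(n, seed_weights):
    # seed_weights stands in for a policy initialised from human replays.
    return [Agent(f"agent-{i}", seed_weights) for i in range(n)]
```

Calling `run_league(make_league(5, {"rush": 1.0, "expand": 1.0, "defend": 1.0}), rounds=100)` returns the same agents with updated `wins` counts and strategy weights, which is about as far as the analogy with the real system goes.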

Five of these agents went up against Germany’s ace player, TLO, in five matches on the Catalyst map; both sides used the Protoss alien race that TLO plays at a Grandmaster level (but he’s even better with the Zerg race). AlphaStar came out on top all five times.

Following that, the AI was trained for an additional week before it competed against MaNa, a Polish player who excels with the Protoss race. AlphaStar won against him five times as well.

It’s worth noting that the AI did have an advantage: unlike human players, who have to constantly move their camera around the map to see what’s going on and take action accordingly – whether that’s ordering units to build defenses or commanding troops to attack the enemy – AlphaStar was able to view the entire map area throughout the match. When an AI agent trained for seven days was forced to use the same camera-led map view as human players, it lost to MaNa.

The experiment showed that it’s possible to train AI to develop strategies effective enough to defeat top-ranked players in something as complex as StarCraft II. But it’s not just about mimicking humans, explained TLO:

“AlphaStar takes well-known strategies and turns them on their head. The agent demonstrated strategies I hadn’t thought of before, which means there may still be new ways of playing the game that we haven’t fully explored yet.”

His observation is similar to one made by DeepMind’s CEO, Demis Hassabis, who described the chess-playing style of the company’s AlphaZero AI as unlike that of humans or machines, and more like that of an ‘alien.’ That’s perhaps one of the most compelling findings from this exercise: with enough data and room for experimentation, AI could help uncover novel and unexpected solutions to difficult questions.

The team at DeepMind hopes that the advances it’s made in training AI on these tricky challenges could open the door to using artificial intelligence to solve more pressing scientific problems.

Read more about the training methodology over on DeepMind’s site.
