The US Army wants to develop fully autonomous weapons systems, but its initiatives to take humans out of the loop have been met with push-back from lawmakers and the general public. The government’s solution? Treat it as a PR problem.

US Assistant Secretary of the Army for Acquisition, Logistics, and Technology Bruce Jette recently tried to assuage concerns over the military’s integration of AI into combat systems. In doing so, he inadvertently invoked the plot of the 1983 science fiction thriller “WarGames.”

In the movie, which stars a young Matthew Broderick, a computer whiz hacks a government system and nearly causes an artificially intelligent computer to start World War III. The hacking part isn’t directly relevant to this article, though it’s worth noting that our country may not be prepared for a cybersecurity threat to its defense systems.

No, the important part is how the story plays out – we’ll get back to that in a bit.

Back in the real world here in 2019, Jette spoke to reporters last week during a Defense Writers Group meeting. According to a report from Army Times, he said:

> So, here’s one issue that we’re going to run into. People get worried about whether a weapons system has AI controlling the weapon. And there are some constraints about what we’re allowed to do with AI. Here’s your problem: If I can’t get AI involved with being able to properly manage weapons systems and firing sequences, then in the long run I lose the time window.
>
> An example is let’s say you fire a bunch of artillery at me, and I need to fire at them, and you require a man in the loop for every one of those shots. There’s not enough men to put in the loop to get them done fast enough. So, there’s no way to counter those types of shots. So how do we put AI hardware and architecture but do proper policy? Those are some of the wrestling matches we’re dealing with right now.

In essence, Jette’s framing the broad case for the Army’s use of autonomous weaponry as a defense argument. When he describes “artillery,” it’s obvious he’s not talking about mortars and Scud missiles. Our current generation of AI and computer-based solutions works quite well against the current generation of artillery.

Instead, it’s apparent he’s referring to other kinds of “artillery” such as drone swarms, UAVs, and similar unmanned ordnance-related threats. The idea here is that enemies such as Russia and China might not hesitate to deploy killer drones, so we need powerful AI to serve as a defense mechanism.

But this isn’t a new scenario. The specific systems in place to defend against any kind of modern ordnance – from ICBMs equipped with nuclear warheads to self-destructing drones – have always relied on computers and machine learning.

The US Navy, for example, started using the Phalanx CIWS automated counter-measure system aboard its ships in the late 1970s. This system, still in use on many vessels today, has evolved over the years, but its purpose remains unchanged: it’s meant to be the last line of defense against incoming ballistic threats to ships at sea.

The point being: the US public (generally speaking) and most lawmakers have never had a problem with automated defense systems. It seems disingenuous for Army leadership to paint the current issue as a problem with using AI to defend against artillery. It’s not.

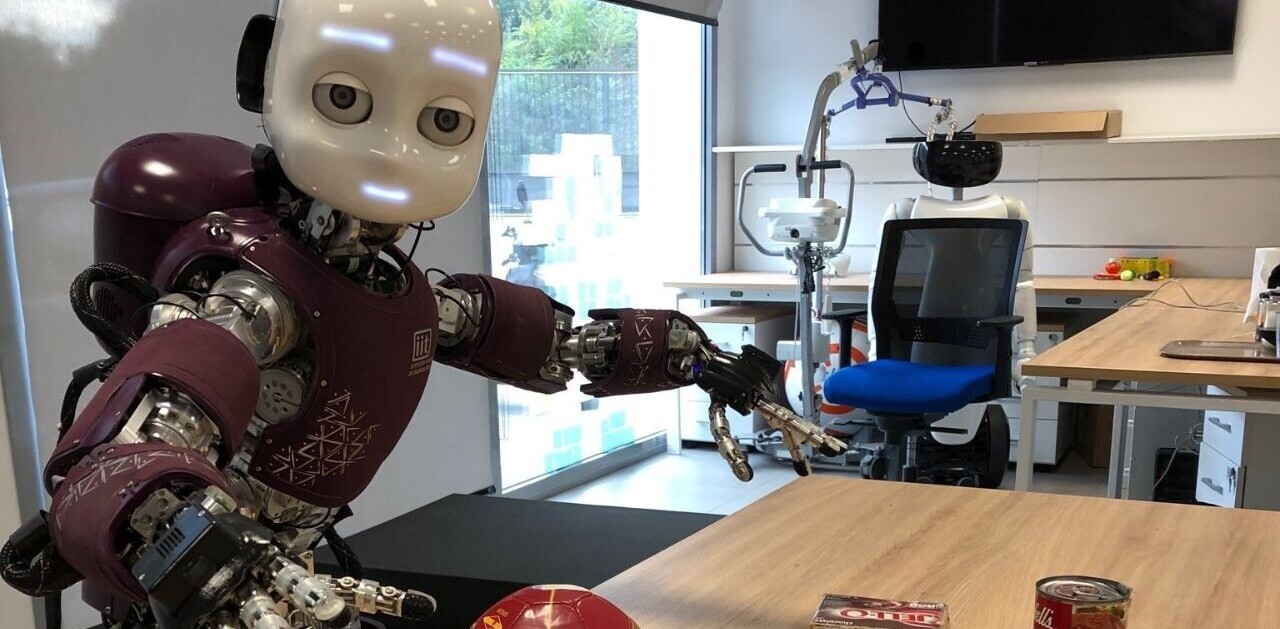

The various groups, experts, and concerned journalists raising the red flag on autonomous weaponry are talking about machines developed with the ability to “choose” to kill a person without any human input.

In the movie “WarGames,” the US government runs a series of scenarios designed to determine the nation’s readiness for a nuclear war. When it’s determined that the humans tasked with “pushing the button” and firing a nuclear missile at another country are likely to refuse, the military decides to pursue an artificial intelligence option.

Robots don’t have a problem wiping out entire civilian populations as a response to the wars their leaders choose to engage in.

Luckily for the fictional denizens of the world in the movie, Matthew Broderick saves the day. He forces the computer to run simulations until it discovers “mutually assured destruction.” This is a military doctrine under which certain events trigger a response resulting in the obliteration of both the aggressor and the defender (as in, their entire countries). In doing so, the computer realizes that the only way to win is not to play.

The US Army’s attempts to convince the general public that it needs AI capable of killing people as a defense measure against artillery are specious at best. It doesn’t. We need AI counter-measures, and it’s a matter of national security that our military continues to develop and deploy cutting-edge technology, including advanced machine learning.

But developing machines that kill humans without a human’s intervention shouldn’t be massaged into that conversation like it’s all the same thing. There’s a distinct and easy-to-understand ethical difference between autonomous systems that shoot down ordnance, unmanned machines, and other artillery and those designed to kill people or destroy manned vehicles.

Matthew Broderick probably won’t be able to save us with his fabulous acting and charming delivery when we get stuck in a real murder-loop with problem-solving robots aiming to end a conflict by any means necessary.

We reached out to the Army public affairs office for more information but didn’t receive an immediate response.
