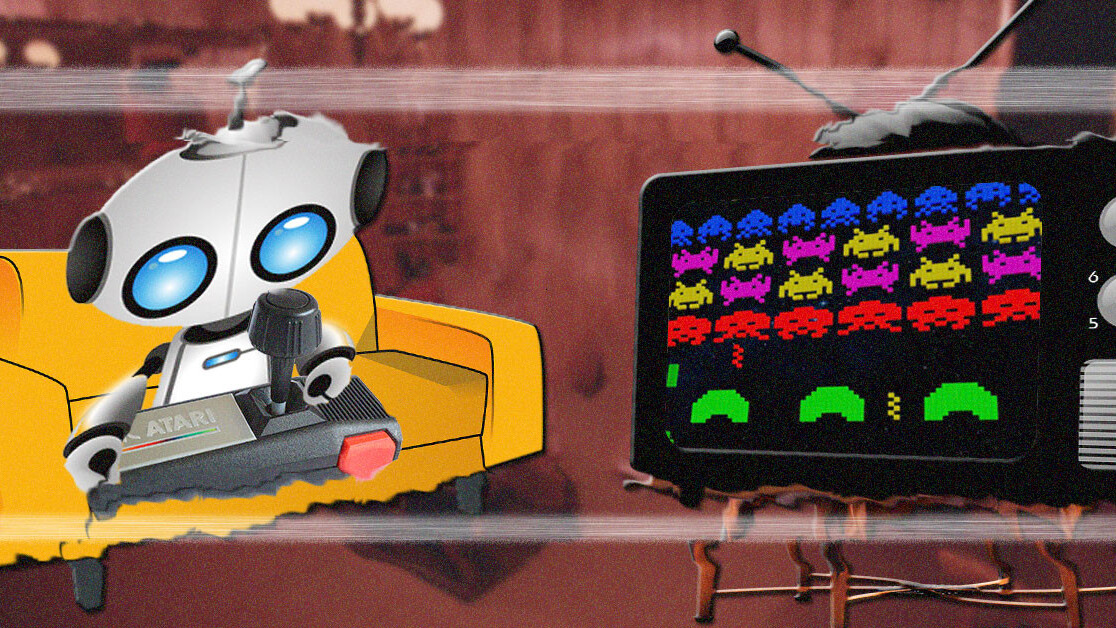

If you teach a robot to fish, it’ll probably catch fish. However, if you teach it to be curious, it’ll just watch TV and play video games all day.

Researchers from OpenAI, the singularity-focused think tank co-founded by Elon Musk, recently published a research paper detailing a large-scale study on curiosity-driven learning. In it, they show how AI models trained without “extrinsic rewards” can develop and learn skills.

Basically, they’ve figured out how to get AI to do stuff without explicitly telling it what its goals are. According to the team’s white paper:

“This is not as strange as it sounds. Developmental psychologists talk about intrinsic motivation (i.e., curiosity) as the primary driver in the early stages of development: Babies appear to employ goal-less exploration to learn skills that will be useful later on in life. There are plenty of other examples, from playing Minecraft to visiting your local zoo, where no extrinsic rewards are required.”

The idea here is that if we can get machines to explore environments without human-coded rewards built in, we’ll be that much closer to truly autonomous machines. This could have incredible implications for things such as developing rescue robots or exploring space.

To study the effects of intrinsically-motivated deep learning, the researchers turned to video games. These environments are perfectly suited for AI research due to their inherent rules and rewards. Developers can tell AI to play, for example, Pong, and give it specific conditions like “don’t lose,” which would drive it to prioritize scoring points (theoretically).

When the researchers ran experiments in Atari games, Super Mario Bros., and Pong, they found that agents without goals were capable of developing skills and learning, though sometimes the results got a bit… interesting.

The curiosity-driven agent kind of sets its own rules. It’s motivated to experience new things. So, for example, when it plays Breakout, the classic brick-breaking game, it performs well because it doesn’t want to get bored:

“The more times the bricks are struck in a row by the ball, the more complicated the pattern of bricks remaining becomes, making the agent more curious to explore further, hence, collecting points as a bi-product. Further, when the agent runs out of lives, the bricks are reset to a uniform structure again that has been seen by the agent many times before and is hence very predictable, so the agent tries to stay alive to be curious by avoiding reset by death.”
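For readers who want to see the mechanics, here is a minimal sketch of the “predictable means boring” idea. It is not the researchers’ code: their agents learn with neural networks, while this toy swaps in a simple counting model, and every name in it (`CountingForwardModel`, `curiosity_reward`, the state labels) is made up for illustration. The reward is simply how surprised the model is by what happens next.

```python
from collections import defaultdict


class CountingForwardModel:
    """Toy stand-in for a learned dynamics model (hypothetical, not from the paper).

    It estimates how likely a next state is by counting how often that
    outcome followed the same (state, action) pair before.
    """

    def __init__(self):
        self.counts = defaultdict(lambda: defaultdict(int))

    def surprise(self, state, action, next_state):
        seen = self.counts[(state, action)]
        total = sum(seen.values())
        # Probability the model assigned to what actually happened.
        p = seen[next_state] / total if total else 0.0
        return 1.0 - p

    def update(self, state, action, next_state):
        self.counts[(state, action)][next_state] += 1


def curiosity_reward(model, state, action, next_state):
    """Intrinsic reward: high for surprising transitions, low for familiar ones."""
    reward = model.surprise(state, action, next_state)
    model.update(state, action, next_state)
    return reward


model = CountingForwardModel()
# The freshly reset wall of bricks always behaves the same way.
familiar = [curiosity_reward(model, "fresh_wall", "serve", "fresh_wall_hit") for _ in range(3)]
# A half-broken wall produces a pattern the model has never seen.
novel = curiosity_reward(model, "half_broken_wall", "serve", "new_pattern")
print(familiar, novel)  # [1.0, 0.0, 0.0] 1.0
```

The reset wall stops paying out after the first visit, while the unfamiliar pattern is still worth the full reward, which is roughly why staying alive ends up looking attractive to a curious agent.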

The AI passed 11 levels of Super Mario Bros., just out of sheer curiosity, indicating that with enough goal-free training sessions an AI can perform quite exceptionally.

It’s not all good in the artificially intelligent neighborhood, however. Curious machines suffer from the same kind of problems that curious people do: they’re easily distracted. When researchers pitted two curious Pong-playing bots against one another, the bots forewent the match and decided to see how many volleys they could achieve together.

The research team also tested out a common thought experiment called the “Noisy TV Problem.” According to the team’s white paper:

“The idea is that local sources of entropy in an environment like a TV that randomly changes channels when an action is taken should prove to be an irresistible attraction to our agent. We take this thought experiment literally and add a TV to the maze along with an action to change the channel.”

It turns out they were right: there was a significant dip in performance when the AI tried to run a maze and found a virtual TV.
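Why is a random TV so hard to resist? A rough sketch, under the assumption that curiosity is scored as “how badly did I predict what came next,” makes the trap visible. This is a toy, not the paper’s setup: the function name `average_surprise`, the ten-channel TV, and the counting-based predictor are all illustrative assumptions.

```python
import random
from collections import defaultdict


def average_surprise(next_state_fn, steps=500, tail=100):
    """Mean surprise over the last `tail` steps of repeating one action.

    Surprise is 1 minus the fraction of past steps that produced the same
    outcome, a toy stand-in for a learned model's prediction error.
    """
    counts = defaultdict(int)
    rewards = []
    for _ in range(steps):
        outcome = next_state_fn()
        total = sum(counts.values())
        p = counts[outcome] / total if total else 0.0
        rewards.append(1.0 - p)
        counts[outcome] += 1
    return sum(rewards[-tail:]) / tail


rng = random.Random(0)  # fixed seed so the run is repeatable

# Walking down a maze corridor: the same step always leads to the same place.
corridor = average_surprise(lambda: "next_tile")

# Pressing the remote: the TV jumps to one of 10 channels at random.
noisy_tv = average_surprise(lambda: rng.randrange(10))

print(f"corridor: {corridor:.2f}, noisy TV: {noisy_tv:.2f}")
```

The corridor becomes perfectly predictable and its reward falls to zero, but the TV can never be predicted, so its reward never fades. An agent chasing surprise has little reason to leave the couch.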

These curious machine learning agents seem to be the most human-like AI we’ve come across yet. What’s that say about us?

H/t: Quartz
