Google has published a brand new AI experiment called Move Mirror, which matches your pose with a catalogue of 80,000 photos. Useless? Kinda, but it’s also pretty bloody cool.

Here’s how it works: when you visit the Move Mirror website and grant it access to your computer’s webcam, it maps the positioning of your joints using a computer vision model called PoseNet. It then compares that against a massive library of 80,000 photographs, and returns the one that most closely matches your pose.
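To get a feel for the matching step, here’s a minimal sketch in Python. It’s an illustration under assumptions rather than Google’s actual code: the article doesn’t spell out the matching method, so this uses cosine similarity between normalized keypoint vectors as a stand-in. The function names, file names and coordinates are all hypothetical, and each pose has just four keypoints to keep things short (PoseNet itself detects 17).

```python
import math

def normalize(pose):
    """Flatten (x, y) keypoints, center them, and scale to unit length,
    so position in frame and distance from the camera don't matter."""
    cx = sum(x for x, _ in pose) / len(pose)
    cy = sum(y for _, y in pose) / len(pose)
    flat = [v for x, y in pose for v in (x - cx, y - cy)]
    norm = math.sqrt(sum(v * v for v in flat)) or 1.0
    return [v / norm for v in flat]

def cosine_similarity(a, b):
    """Dot product of two unit-length vectors (1.0 means identical direction)."""
    return sum(p * q for p, q in zip(a, b))

def best_match(query, library):
    """Return the name of the library pose most similar to the query pose."""
    q = normalize(query)
    return max(library, key=lambda name: cosine_similarity(q, normalize(library[name])))

# Hypothetical stand-in for the photo catalogue: file name -> keypoints.
library = {
    "arms_up.jpg": [(0.5, 0.2), (0.3, 0.0), (0.7, 0.0), (0.5, 0.9)],
    "arms_down.jpg": [(0.5, 0.2), (0.3, 0.6), (0.7, 0.6), (0.5, 0.9)],
}

# Hypothetical webcam pose: roughly "arms up", but at twice the scale.
webcam_pose = [(1.0, 0.4), (0.6, 0.05), (1.4, 0.05), (1.0, 1.8)]

print(best_match(webcam_pose, library))
```

A linear scan like this is fine for two photos. For a catalogue of 80,000, you’d presumably want some kind of index to keep the lookups fast enough for real-time use.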

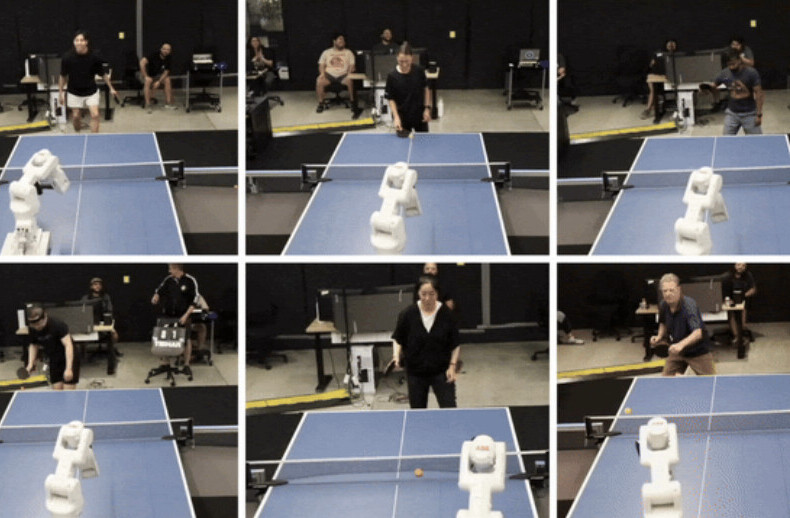

The matches are updated in real time, meaning that as your body’s positioning changes, so too does the match returned by Google’s quirky little experiment. You can even make a GIF of your matches for later social sharing.

The secret sauce powering Move Mirror is a potent JavaScript library called TensorFlow.js, which runs within the browser. On the all-important privacy front, that means you’re not providing Google with footage from your webcam, as the computational heavy lifting happens on your own computer.

It’s also pretty cool to see how commoditized computer vision technology has become. Not only can we make sophisticated AI-powered websites like this for a joke (or, perhaps more fairly, as a technical demonstration), but they can run on the end-user’s computer, without the need to spend thousands on renting some high-end server iron.

You can check out Move Mirror here, and if you’re especially curious, you can read Google’s blog post, which explains the technical side of the project in more detail. If you’re really curious, there’s an even deeper dive on the TensorFlow Medium that’s definitely worth a read.
