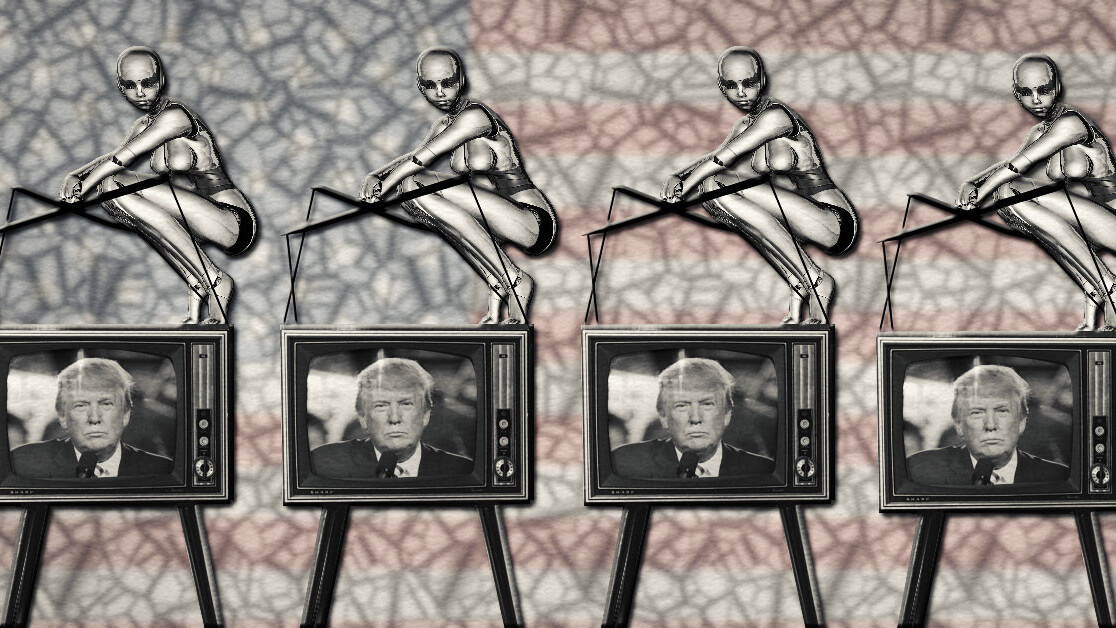

Fake AI-generated videos featuring political figures could be all the rage during the next election cycle, and that’s bad news for democracy.

A recently released study indicates that DeepFakes, a neural network that creates fake videos of real people, represents one of the largest threats posed by artificial intelligence.

The study’s authors state:

> AI systems are capable of generating realistic-sounding synthetic voice recordings of any individual for whom there is a sufficiently large voice training dataset. The same is increasingly true for video. As of this writing, “deep fake” forged audio and video looks and sounds noticeably wrong even to untrained individuals. However, at the pace these technologies are making progress, they are likely less than five years away from being able to fool the untrained ear and eye.

In case you missed it, DeepFakes was thrust into the spotlight last year when videos made with it began appearing on social media and pornography websites.

The manipulation of video, images, and sound isn’t exactly new. Nearly a decade ago we watched Jeff Bridges grace the screen in “Tron: Legacy,” appearing as he had almost three decades earlier when he starred in the original “Tron.”

What’s changed? It’s ridiculously easy to use DeepFakes because, essentially, the AI does all of the hard work. It requires no video editing skills and only minimal knowledge of AI; most DeepFakes apps are built with TensorFlow, Google’s open-source machine learning platform. Just about anyone can set up and train a DeepFakes neural network to produce a semi-convincing fake video.

This is part of the reason why, when DeepFakes entered public awareness last year, it was met with a mixture of excitement and fear, followed by revulsion once people started using it to exploit female celebrities.

If you haven’t seen the video in which former President Obama insults President Trump (except, of course, he didn’t; it’s fake), you really should take a moment to watch it, if only to gain some perspective.

Most people watching that video will assume it’s fake; not only is the content implausible, but the picture is littered with artifacts. DeepFakes isn’t perfect by any means, but it doesn’t have to be. A team of humans trying to create these fake videos would likely spend hours upon hours painstakingly editing them frame by frame. With even a modest hardware setup, however, a bad actor can spit out DeepFakes videos in minutes. When it comes to successfully spreading propaganda, quantity wins out over quality.

Forensic technology expert Hany Farid, of Dartmouth College, told AP News:

> I expect that here in the United States we will start to see this content in the upcoming midterms and national election two years from now. The technology, of course, knows no borders, so I expect the impact to ripple around the globe.

Even if the videos aren’t that great (and trust us, they’ll get better), they could still trick enough people into believing just about anything. It’s not difficult to imagine bad actors using AI to fake videos of politicians or, perhaps even more likely, their supporters engaging in behavior that reinforces a divisive narrative.

The US government is working on a fake video detector, as are private-sector researchers around the globe. But there’s never going to be a ubiquitous system that protects the entire population from seeing fake videos. That means everyone needs to remain vigilant, because propaganda doesn’t have to convince anyone; it just has to make a few people doubt the truth.

For more information on neural networks, check out our guide. And don’t forget to visit our artificial intelligence section to stay up to date on our future robot overlords.
