Google’s UK-based sister company, DeepMind, recently developed an AI that can render entire scenes in 3D after having only observed them as flat 2D images.

Broad strokes: AI researchers at the cutting edge are trying to teach machines to learn like humans. We don’t see the world in pixels; we look around our environment and make assumptions about everything in it. If we can see someone’s chest, we assume they also have a back, even though it may not be visible from our perspective.

If you play peek-a-boo with a baby, the baby learns that your face still exists even when you cover it with your hand. And that’s basically what DeepMind’s team did with their machines: they trained an AI to guess what things look like from angles it hasn’t seen.

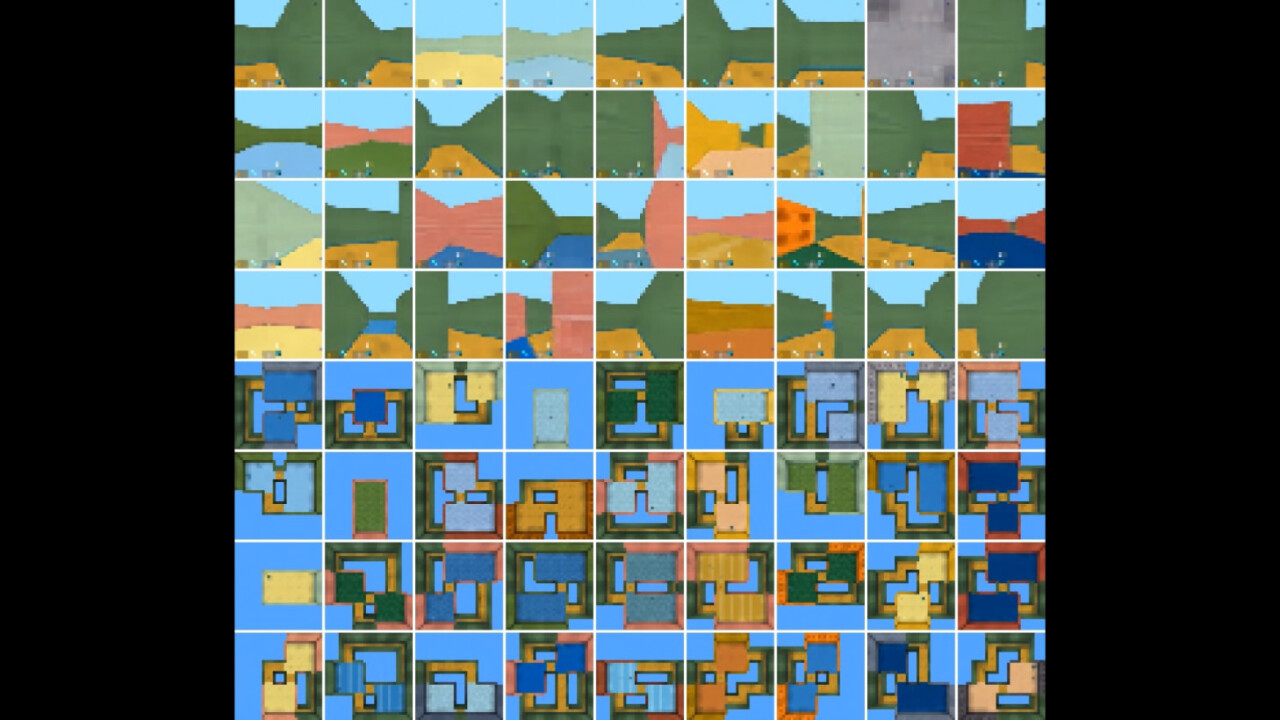

The details: DeepMind’s scientists came up with a Generative Query Network (GQN), a neural network designed to imagine what a scene of objects would look like from a different perspective. Basically, the AI observes flat 2D pictures of a scene and then tries to recreate it. What’s significant, in this case, is that DeepMind’s AI doesn’t use any human-labelled input or previous knowledge. It observes as few as three images and goes to work predicting what a 3D version of the scene would look like.

Think of it like taking a photo of a cube and asking an AI to render the same picture from a different angle. Things like lighting and shadows would change, as well as the direction of the lines making up the cube. To render the requested image, the AI, using the GQN, has to imagine what the cube would look like from angles it has never actually observed.
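To make the cube example concrete, here’s a minimal sketch of what “the same scene from a different angle” means geometrically. To be clear, this is plain perspective projection with the 3D shape already known, not DeepMind’s GQN, which has to predict the new view from 2D images alone. The names `CUBE`, `project` and `render`, along with the camera distance and focal length, are illustrative assumptions rather than anything from DeepMind’s work.

```python
import math

# Eight corners of a unit cube centred on the origin (illustrative scene).
CUBE = [(x, y, z) for x in (-0.5, 0.5) for y in (-0.5, 0.5) for z in (-0.5, 0.5)]


def project(point, yaw_deg, distance=3.0, focal=1.0):
    """Project one 3D point to 2D for a camera orbiting the vertical (y) axis.

    Hypothetical helper: the camera looks at the origin from `distance`
    units away, and `yaw_deg` says how far it has orbited around the cube.
    """
    x, y, z = point
    a = math.radians(yaw_deg)
    # Orbiting the camera one way is equivalent to rotating the scene
    # the other way, so we rotate the point about the y axis instead.
    xr = x * math.cos(a) - z * math.sin(a)
    zr = x * math.sin(a) + z * math.cos(a)
    depth = zr + distance  # how far the point is in front of the camera
    # Perspective divide: points farther away land closer to the centre.
    return (focal * xr / depth, focal * y / depth)


def render(yaw_deg):
    """Return the 2D positions of all eight cube corners for one viewpoint."""
    return [project(p, yaw_deg) for p in CUBE]


front = render(0)   # the "photo" the AI was shown
side = render(40)   # the view it is asked to produce
```

Comparing `front` and `side` shows every corner landing at a different 2D position, which is why the cube’s lines change direction between the two views. A GQN has to produce that kind of change without ever being handed the 3D coordinates.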

The impact: The researchers are working towards “fully unsupervised scene understanding.” So far, the AI hasn’t been trained on real-world images, so it follows that the next step would be rendering realistic scenes from photographs.

It’s possible, in the future, that DeepMind’s GQN-based AI could generate on-demand 3D scenes that are nearly identical to the real world, using nothing but photographs.
