If you thought DeepFakes, the AI that swaps celebrity faces into any video (like porn), was scary, wait until you see what Facebook’s DensePose can do.

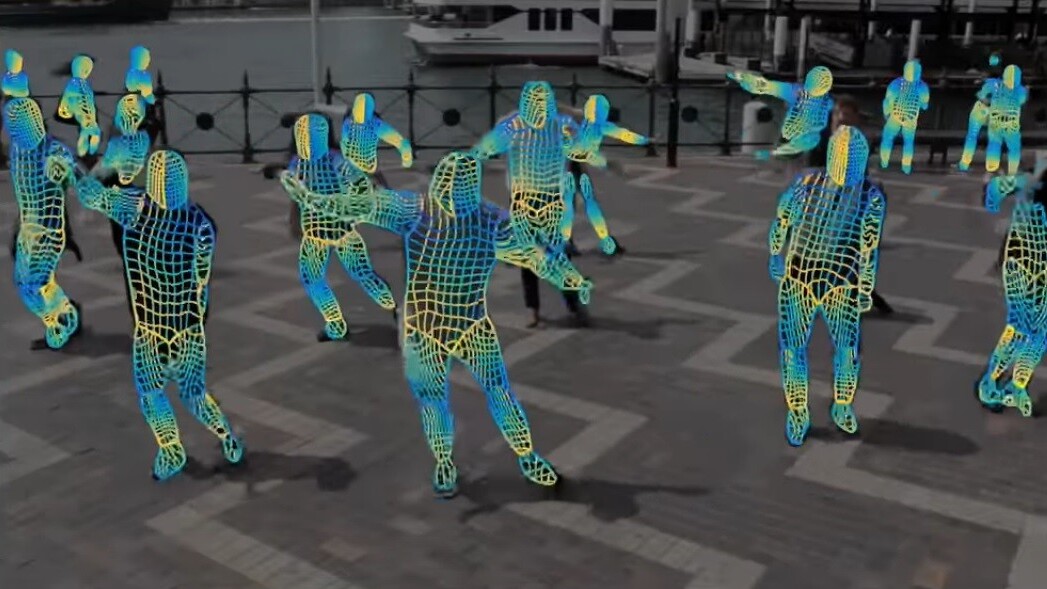

Facebook’s AI research (FAIR) division last week revealed the details of a neural network that maps 2D images to humans in videos. Basically, the team taught an AI how to add “skins” to people in videos – in real time.

If you’ve ever wanted to live in a world where, at the push of a button, you could turn all the people in any video into Wookiees, for example, this is fabulous news for you.

While there have been other 2D image-mapping neural networks, this one is the first to put it all together in real time and effectively “connect the dots” without a depth sensor. It was created by FAIR researchers Natalia Neverova and Iasonas Kokkinos with INRIA researcher Rıza Alp Güler.

It uses a convolutional neural network that was built by first creating a human-annotated data set and then training a “teacher” AI. In total, 50,000 images of human body parts were scrutinized by humans, who then annotated more than 5 million data points. Those annotations provided the training data for the network.

Once the system understood how humans see other humans, it was ready to train its “learner” how to see people the same way.

The end result is an AI that uses a 2D RGB image as input and applies it to any number of humans in a video. Instead of putting a celebrity’s face on someone else’s body, you could change the way people look in a video as if editing a Minecraft skin.

Of course, there are applications for this that go far beyond turning celebrities and movie characters into nudes or Wookiees.

An AI with the ability to isolate multiple humans in a video and apply targeted 2D image maps could be extraordinarily useful to law enforcement. It could, potentially, be adapted to extract all the humans from footage of a crowd and create a searchable index by, for example, body language or suspicious movement patterns.

According to the team’s white paper, the AI also has its sights set on the gaming industry. With some more work and optimization, it could one day be a substitute for character modeling in video games. And it could be the key to creating realistic augmented reality characters for people to interact with in real time.

And DensePose is a gamer at heart: it runs on a GTX 1080 GPU. But it’s not quite ready to fool the human eye as, according to the developers, it currently “operates at 20-26 frames per second for a 240 × 320 image or 4-5 frames per second for a 800 × 1100 image.”
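To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python that converts the quoted frame rates into time per frame and pixels processed per second. Only the resolutions and frame rates come from the developers' quote; the function and variable names are made up for illustration and have nothing to do with DensePose's actual code.

```python
# Figures quoted by the developers: resolution -> (lowest fps, highest fps).
# Everything else here is plain arithmetic, not part of DensePose itself.
REPORTED = {
    (240, 320): (20, 26),
    (800, 1100): (4, 5),
}


def frame_time_ms(fps):
    """Milliseconds spent on one frame at a given frame rate."""
    return 1000.0 / fps


def summarize(resolution, fps_range):
    """Return pixel count, per-frame time range and pixel throughput range."""
    side_a, side_b = resolution
    low_fps, high_fps = fps_range
    pixels = side_a * side_b
    return {
        "pixels": pixels,
        # The fastest frame rate gives the shortest time per frame.
        "ms_per_frame": (frame_time_ms(high_fps), frame_time_ms(low_fps)),
        "megapixels_per_second": (
            pixels * low_fps / 1e6,
            pixels * high_fps / 1e6,
        ),
    }


if __name__ == "__main__":
    for resolution, fps_range in REPORTED.items():
        stats = summarize(resolution, fps_range)
        best, worst = stats["ms_per_frame"]
        print(
            f"{resolution[0]} x {resolution[1]}: {stats['pixels']} pixels, "
            f"{best:.1f}-{worst:.1f} ms per frame"
        )
```

At the smaller size, each frame takes roughly 38 to 50 milliseconds. At the larger size, that climbs to 200 to 250 milliseconds, which is why high-resolution video is still out of reach for real-time use.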

Still, it all adds up to a world where Meryl Streep and Nicolas Cage’s jobs could soon be just as at-risk as the cashiers’ at McDonald’s.
