A group of scientists recently developed an AI model that uses Google Street View photographs to infer startlingly accurate social insights about a geographic area. By looking at the cars we drive, the researchers’ deep learning network can estimate a community’s racial, political, and economic makeup.

The research was conducted by scientists based at Stanford University, using a type of deep learning model called a convolutional neural network (CNN). Training it involves creating a “gold standard” set of images, labeled and checked by humans, which is used to teach a computer how to classify new images on its own. In this case the machine was taught to look for vehicles and sort images of cars and trucks into 2,657 fine-grained categories.

Over a two-week period the AI processed 50 million images from more than 200 cities in the US, classifying more than 22 million individual vehicles.

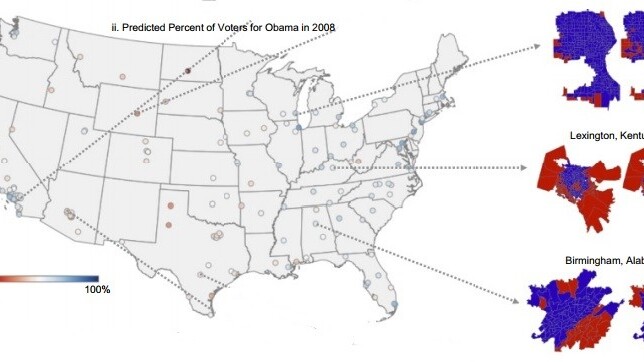

The results were used to estimate the racial makeup, political tendencies, and other census-style demographics of individual areas by ZIP code or precinct. To gauge the system’s accuracy, the scientists compared their results with those from the American Community Survey (ACS).

The political insights gained by the system might be filed in a category labeled “yeah, we could have guessed as much” (but probably not). The researchers, in the project’s white paper, say:

> We found that by driving through a city while counting sedans and pickup trucks, it is possible to reliably determine whether the city voted Democratic or Republican: If there are more sedans, it probably voted Democrat (88% chance), and if there are more pickup trucks, it probably voted Republican (82% chance).
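
The rule of thumb in that quote is simple enough to sketch in a few lines of Python. This is only an illustration of the sedan-versus-pickup comparison, not the researchers' actual model: the function name `guess_vote`, the label strings `"sedan"` and `"pickup"`, and the `"undetermined"` result for a tie are all assumptions made for this example.

```python
from collections import Counter
from typing import Iterable


def guess_vote(vehicle_labels: Iterable[str]) -> str:
    """Guess how a city voted from a list of observed vehicle types.

    Implements only the rule of thumb quoted above: more sedans
    suggests Democratic, more pickup trucks suggests Republican.
    The label strings are hypothetical, not taken from the paper.
    """
    counts = Counter(vehicle_labels)
    sedans = counts["sedan"]
    pickups = counts["pickup"]

    if sedans > pickups:
        return "Democratic"   # the quote gives this an 88% chance
    if pickups > sedans:
        return "Republican"   # the quote gives this an 82% chance
    return "undetermined"     # assumption: the quote does not cover ties


if __name__ == "__main__":
    # Hypothetical drive-through tally, for illustration only.
    observed = ["sedan", "pickup", "sedan", "suv", "sedan", "pickup"]
    print(guess_vote(observed))
```

Other vehicle types, such as the `"suv"` entry above, are simply ignored, since the quoted rule only compares the two counts.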

While the researchers’ CNN-powered model certainly isn’t a replacement for an actual census – at-a-glance insight isn’t exactly the most scientifically sound way of determining information – it offers a glimpse at how AI could provide manageable data for more than just advertisers to benefit from.

Being able to couple the power of machine learning-based image recognition with databases of verified information is one of the next important challenges for AI. Up to now developers have taught computers how to see the world in a way similar to us, but the machines are still pretty stupid when it comes to understanding what they’re looking at.

Humans are, of course, much better at looking at images and gaining general insight: we could see a bird, a tire, a crowbar, and a basketball in the same picture, whereas this AI is only looking for cars.

In this case, however, the AI is actually a little better at understanding the minor nuances between specific vehicle makes and models in an image than an average person would likely be. Most of us couldn’t name every car on the road since 1990 by year.

There’s also the fact that it completes its work in a couple of weeks, whereas the same task would have taken humans approximately 15 years.
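
A quick back-of-the-envelope check shows how those two timescales relate. The image count and the two-week duration come from the figures above; the 10 seconds a person would spend per image is an assumption made here, chosen because it roughly reproduces the 15-year estimate, and the article itself does not state it.

```python
SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY

IMAGES = 50_000_000            # images processed, per the article
MACHINE_DAYS = 14              # "two-week period", per the article
HUMAN_SECONDS_PER_IMAGE = 10   # ASSUMPTION: not stated in the article


def machine_images_per_second(images: int = IMAGES,
                              days: int = MACHINE_DAYS) -> float:
    """Average throughput needed to finish `images` in `days`."""
    return images / (days * SECONDS_PER_DAY)


def human_years(images: int = IMAGES,
                seconds_per_image: float = HUMAN_SECONDS_PER_IMAGE) -> float:
    """Years of non-stop work for one person at the assumed pace."""
    return images * seconds_per_image / SECONDS_PER_YEAR


if __name__ == "__main__":
    print(f"Machine: about {machine_images_per_second():.0f} images per second")
    print(f"Human:   about {human_years():.1f} years of continuous work")
```

Under that assumed pace, the human estimate lands between 15 and 16 years with no breaks, while the machine has to average roughly 41 images every second to finish in two weeks.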

It’s becoming obvious that we’re inching ever closer to the day when AI actually becomes better at seeing the world than humans are. Hopefully when that happens the computers will do more than make it easier to see our differences.
