Silver Logic Labs (SLL) is in the people business. Technically, it's an AI startup, but what it really does is figure out what people want. At first glance it looks like the company has simply found a better way to run focus groups, but after talking to CEO Jerimiah Hamon we learned there's nothing simple about the work he's doing.

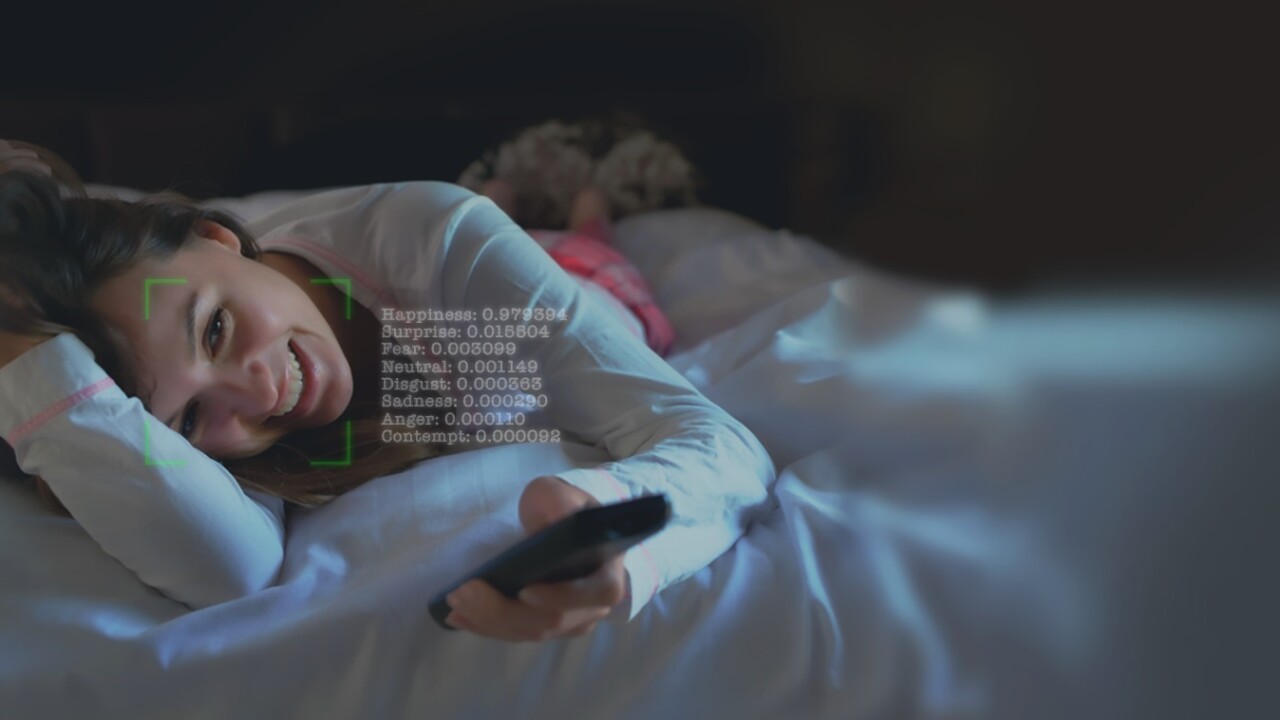

The majority of AI in the world is being taught to do boring stuff. The machines are learning to analyze data and scrape websites. They're being forced to sew shirts and watch us sleep. Hamon and his team created an algorithm that uses a camera to analyze the tiniest of human movements and determine what a person is feeling.

Don't worry if your mind isn't blown right now; it takes a little explanation to sink in. Imagine you're trying to determine whether a TV show will be popular with an audience, and you've gathered a group of test viewers who've just seen your show. How do you know whether they're responding honestly, or simply responding the way they think they should? Hamon told us:

You have these situations where you’re trying to determine how people feel about something that could possibly be considered controversial, or that people might not want to be honest about. You might have a scene with two men kissing each other, or two women. You might have a scene where a dog gets hit by a car in such a way that it’s supposed to be funny.

We’ll find, sometimes, people will respond that they didn’t like those things, but then when we analyze what they were doing while they were watching it, and we pick up these details and we see they’re expressing joy, or arousal, quite often.

And we’re better at predicting whether that show is going to do well based on our insight, than if you just go by how people respond to the list of questions.

SLL is trying to solve one of the oldest problems in the world: people lie. In fact, as the fictional Dr. House, M.D., put it, "Everybody lies." More importantly, though, Hamon, who is not at all fictional, told us:

With our system we find that we get a lot more data. We can use it to watch every second and compare every second to every other second in a way a person watching can't. So when asked "Can you predict a Nielsen rating?" the answer is yes. The lowest accuracy rating we've got is about 89%. That's the lowest.

Being able to determine the viability of a TV show, or how people feel about a specific scene in a movie, is a pretty neat trick. The fact that the team has adapted the technology to work with almost any laptop camera, for survey purposes such as observing someone watching a video clip at home, is astounding.

Hamon told us the algorithms work so well that, in almost every study, his team ends up flunking some respondents for being under the influence of a substance. A drug-detecting robot that can be deployed through any connected camera? That's a little spooky.

SLL does more than provide analytics for TV shows and movies. In fact, its ambitions might be some of the loftiest we've ever seen from an AI company. We asked Hamon how the technology could be used beyond simply detecting whether someone liked something:

I'm very passionate about health care. With this we can identify neural deficits very quickly. We did a lot of research and it turns out it's proven that if you're going to have a stroke, you will have a series of micro-strokes first. These are undetectable most of the time, sometimes even to the people having them. If you live at a nursing home, for instance, we could have cameras set up; we could detect those.

These people might have a one percent change in gait. We could see that, for example. Our system might be able to detect the first of the micro-strokes and signal for help.

The company also wants to change the way law enforcement works. Hamon believes that dash-cams and body-cams that utilize this technology will save lives. He proposed a what-if scenario:

Say you’ve got someone running up to a building and there’s someone on guard, they might see this person running – who, incidentally, has just lost their baby and needs help – as a threat, maybe due to a lack of training or because they’re scared.

The other side is maybe you see someone running and think they need your help when in reality they have a pound of explosives in their backpack. We know that how a person moves is different based on how they feel. People make these decisions under extreme pressure and they’re not always right.

The technology could also have educational applications. The ability to determine exactly how students respond to a teacher, or to tailor a specific lesson to an individual, could help a lot of people, especially those who aren't benefiting from traditional methods.

It’s about time someone created an AI that helps us better understand each other in a practical sense that might actually save lives.
