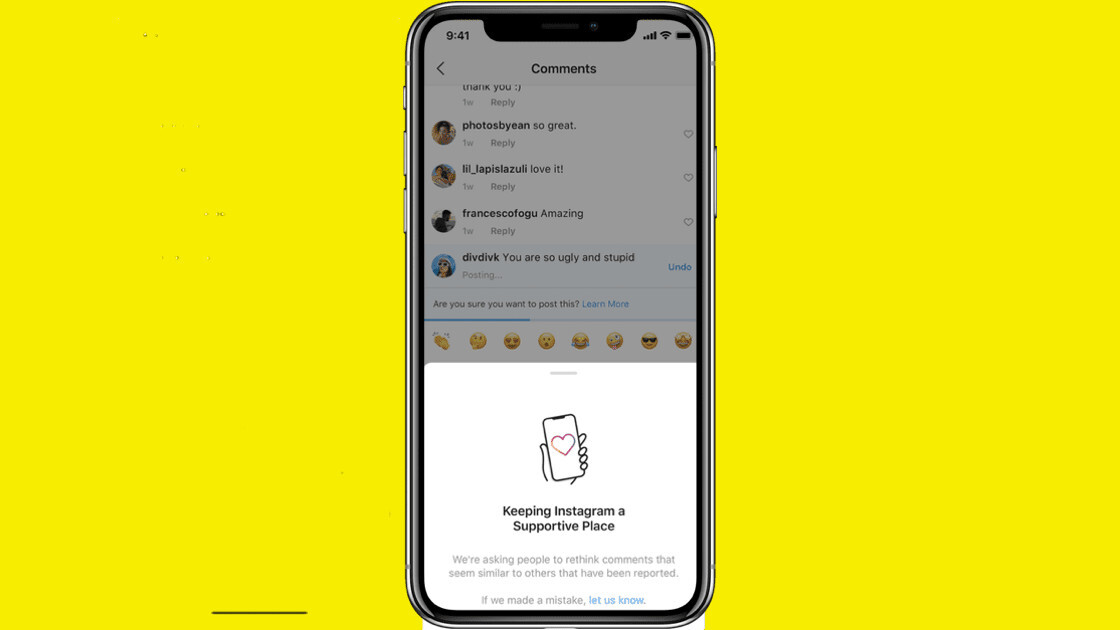

Instagram today announced two new features aimed at curbing bullying on the platform: a warning when you try to post an abusive comment, and a ‘Restrict’ function to limit another person’s interaction with you.

Adam Mosseri, Head of Instagram, said the AI-powered warning feature has stopped some people from posting foulmouthed comments during the early testing period:

“In the last few days, we started rolling out a new feature powered by AI that notifies people when their comment may be considered offensive before it’s posted. This intervention gives people a chance to reflect and undo their comment and prevents the recipient from receiving the harmful comment notification.”

People often use tricks such as symbols or alternative spellings to fool automated filters and post abusive comments anyway. Instagram hasn’t shared any details about what it’s doing to counter that, and hasn’t specified whether the feature is available for languages other than English. We’ve asked the company for more information, and we’ll update this post if we hear back.

The social network is also testing another feature called ‘Restrict,’ which will allow you to limit a person’s interaction with you. If you restrict a person, they will still be able to comment on your posts, but those comments won’t be visible to anyone but themselves. You can then review a restricted person’s comment and choose to make it visible to others.

Instagram said people often don’t block, unfollow, or report their bullies because “it could escalate the situation.” A restricted person won’t be able to see when you’re active on the platform, or when you’ve read their direct messages.

In April, the platform started demoting offensive posts as a measure to curb hate speech. We’ll have to wait and see whether these features are effective, and whether the social network’s AI is strong enough to detect abusive comments that have been deliberately disguised.
