During this year's WWDC, its annual developer conference, Apple took significant steps to show developers and creators that it is finally getting serious about artificial intelligence.

The company announced Core ML, an all-new framework designed to help developers build smarter apps by embedding on-device machine learning capabilities in them. But it seems the new system still has some learning to do.

Toying around with the Core ML beta, developer Paul Haddad took to Twitter to showcase how well the framework handles computer vision tasks.

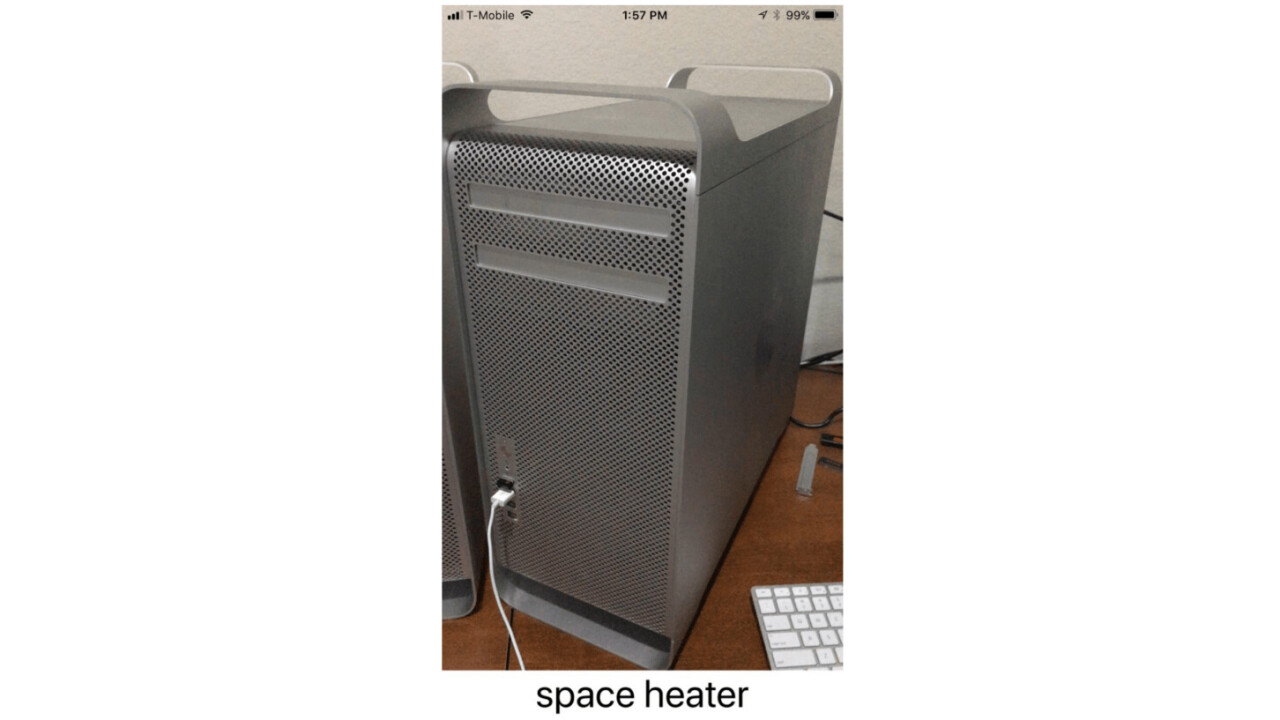

Using the new built-in screen recording tool in iOS 11, Haddad tested Core ML's ability to identify and caption objects in real time.

Holy crap. CoreML is really smart. pic.twitter.com/3n7rTtK176

— Paul Haddad (@tapbot_paul) June 8, 2017

I wasn’t joking either just got to get it at the right angle. This also really heats up this phone. pic.twitter.com/dvOEXdHHTn

— Paul Haddad (@tapbot_paul) June 8, 2017

While the app appeared to accurately recognize certain objects, such as a screwdriver, a keyboard and some boxes, it oddly struggled to caption the first-generation Mac Pro, misidentifying the desktop as either a "speaker unit" or a "space heater."

Despite these minor inconsistencies, Haddad was enthusiastic about the framework's potential, noting that users need to point their cameras "at the right angle" for the best results. He also remarked that running the app noticeably heated up his phone.

Apps that rely on machine learning to caption real-world objects misidentify their targets all the time, so it is hardly surprising that Apple's framework gets things wrong here and there. It is, after all, still in beta.

That said, the software will likely improve once Apple rolls out the official release this autumn and more people start experimenting with Core ML.

Here are some more examples of what you can do with the new framework:

I made a demo app with Core ML and Unsplash API that predicts what is in the photo. I can say Core ML is awesome #WWDC17 #swift #iOS11 pic.twitter.com/58XNjKeEGo

— ahmet (@theswiftist) June 7, 2017

CoreML has been fun to play with. I made a small guide on using it https://t.co/g9UEl1EjHw… https://t.co/pItC5JpL1e pic.twitter.com/2S86O1P665

— Austin Tooley (@AustinTooley) June 9, 2017

Meanwhile, you can catch up with everything the Big A announced during WWDC by clicking here.

[H/T Paul Haddad]
