Putting pictures on the Web has many potential uses, whether for personal purposes or for the greater good of the online masses. But tagging those images with useful information for search can be a lengthy process, especially if you have thousands or millions of photos.

With that in mind, Google has revealed a new captioning system that recognizes the content of photos and automatically tags them with descriptions using natural language.

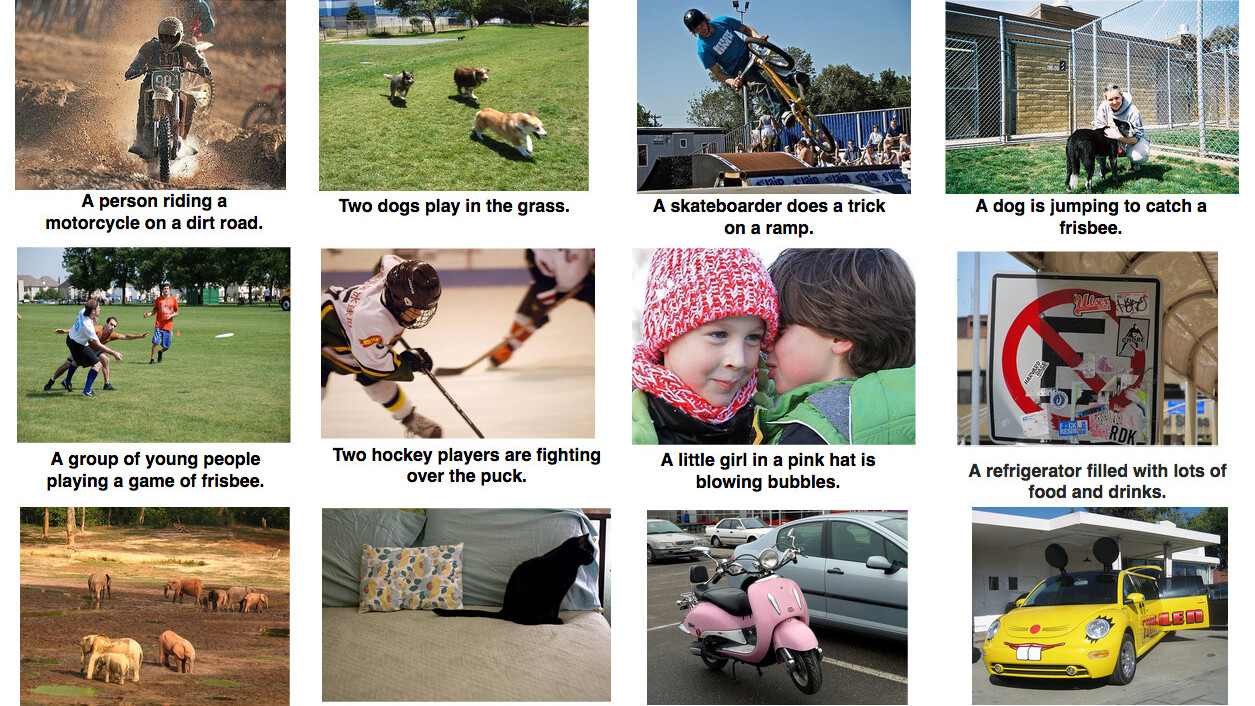

Though there are many examples of intelligent computer vision software that can auto-tag images, this takes things a step further by enabling full descriptions. This could be ‘two dogs play in the grass’, or ‘a little girl in a pink hat is blowing bubbles’.

As you can see from these snapshots, it’s not accurate all the time, but the fact that this is close to being realized with any degree of accuracy is pretty exciting.

While it’s still an early-stage research project, this holds significant promise for the future of artificial intelligence and machine learning.

“This kind of system could eventually help visually impaired people understand pictures, provide alternate text for images in parts of the world where mobile connections are slow, and make it easier for everyone to search on Google for images,” the company says in a blog post.

You can read a more detailed summary of the technology here, or click on the link below to peruse the full paper.

➤ Show and Tell: A Neural Image Caption Generator [Cornell University Library]
