A team of researchers recently developed an algorithm that generates original reviews for wines and beers. Considering that computers can't taste booze, this makes for a curious use case for machine learning.

The AI sommelier was trained on a database containing hundreds of thousands of beer and wine reviews. In essence, it aggregates those reviews and picks out keywords. When the researchers prompt it for a review of a specific wine or beer, it produces something similar to the reviews it has already seen.

According to the researchers, its output is comparable to, and often indistinguishable from, reviews created purely by humans.

The big question here is: who is this for?

The research team says it's for people who can't afford professional reviewers, lack the inspiration to start a proper review, or just want a summary of what's already been said about a beverage.

Lol, what?

Per their research paper:

> Rather than replacing the human review writer, we envision a workflow wherein machines take the metadata as inputs and generate a human readable review as a first draft of the review and thereby assist an expert reviewer in writing their review.
>
> We next modify and apply our machine-writing technology to show how machines can be used to write a synthesis of a set of product reviews.
>
> For this last application we work in the context of beer reviews (for which there is a large set of available reviews for each of a large number of products) and produce machine-written review syntheses that do a good job – measured again through human evaluation – of capturing the ideas expressed in the reviews of any given beer.

That's all well and fine, but it's hard to imagine that the people they describe actually exist.

Are there really people so privileged they can afford their own vintner or brewery, who are somehow also isolated from social media influencers and the huge world of wine and beer aficionados?

This seems like it would be incredibly useful as a marketing scheme but, again, it's hard to imagine anyone who is reluctant to try a particular wine or beer until they've read what an AI thinks of it.

Will the people who benefit from using this AI be transparent with the people consuming the content it creates?

What’s in a review?

When it comes to a human reviewer’s individual tastes, we can look at their body of work and see if we tend to agree with their sentiments.

With an AI, we’re merely seeing whatever its operator cherry-picks. It’s the same with any content-generation scheme.

The most famous AI for content generation is OpenAI's GPT-3. It's widely considered one of the most advanced AI networks in existence and is often cited as the industry's state of the art for text generation. Yet even GPT-3 requires a heavy hand when it comes to output moderation and curation.

It's probably a safe bet that the AI sommelier team's models don't outperform GPT-3 and, thus, require at least a similar level of human attention.

This raises the question: how ethical is it to generate content without crediting the machine?

There’s no feasible world wherein a sentiment such as “AI says our wine tastes great” should be a selling point (outside of the kind of hyperbolic technology events that are usually sponsored by energy drinks and cryptocurrency orgs).

And that means the most likely use cases for an AI sommelier would involve tacitly allowing people to think its outputs were generated by something that could actually taste what it's talking about.

Is that ethical?

That’s not a question we can answer without applying intellectual rigor to a specific example of its application.

The existence of AI sommeliers, GPT-3, neural networks that create paintings, and AI-powered music generators has created a potential ethical nightmare.

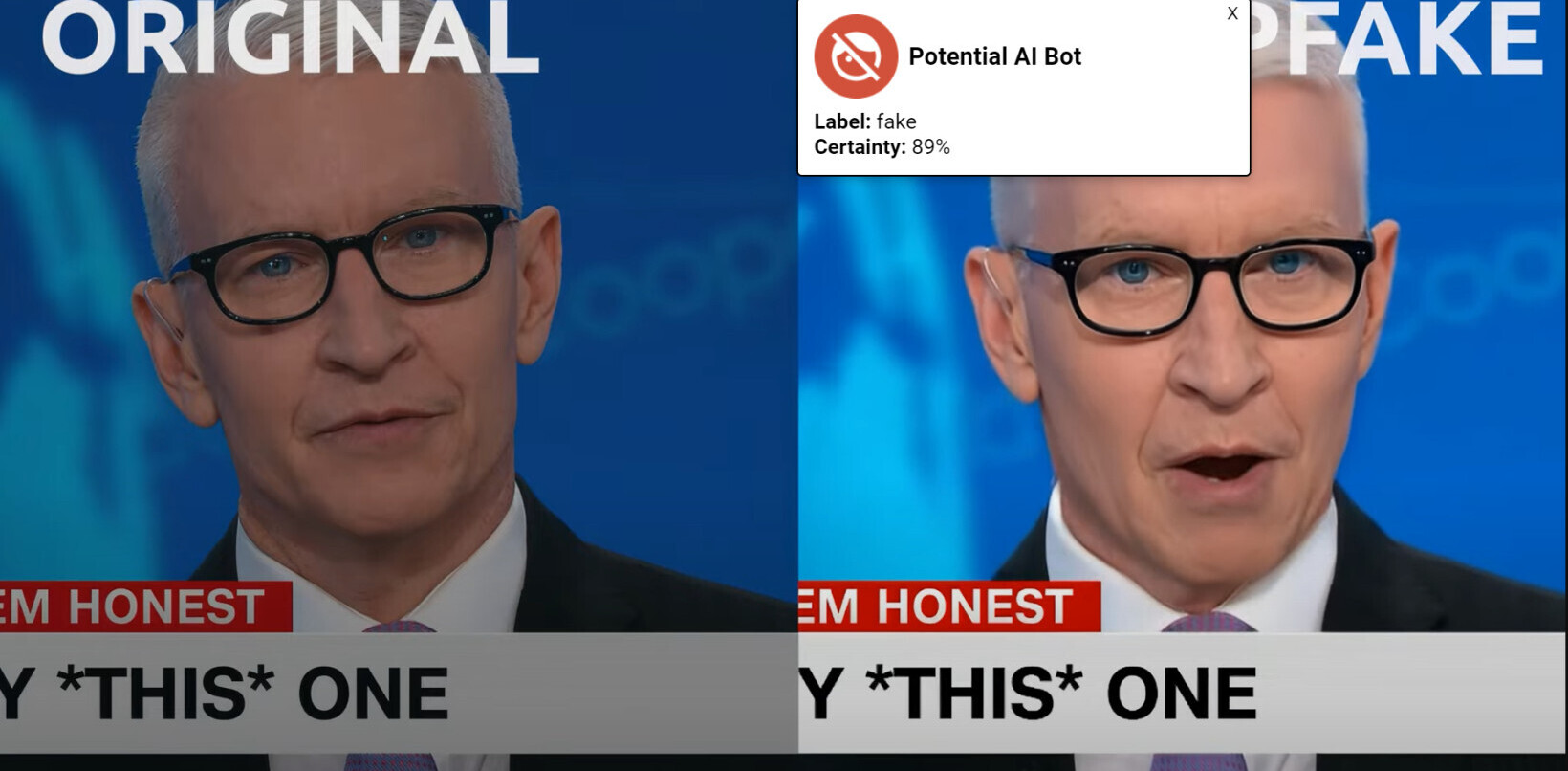

You might not be a wine lover or beer drinker, but that doesn’t mean you’re safe from the reality-distorting effects of AI-generated content.

At this point, can any of us be sure that people aren’t using AI to aggregate breaking news and generate reworded content before passing it off under a human byline?

Is it possible that some of this season's overused TV tropes and Hollywood plot retreads are the result of a writers' room using an AI-powered script aggregator to spit out whatever it thinks the market wants?

And, in the texting- and dating-app-driven world of modern romance, can you ever be truly certain you're being wooed by a human suitor and not by the words of a robotic Cyrano de Bergerac?

Not anymore

The answer to all three is: no. And, arguably, this is a bigger problem than plagiarism.

At least there’s a source document when humans plagiarize each other. But when a human passes off a robot’s work as their own, there may be no way for anyone to actually tell.

That doesn’t make the use of AI-generated content inherently unethical. But, without safeguards against such potentially unethical use, we’re as likely to be duped as a college professor who doesn’t do a web search for the text in the essays they’re grading.

Can an AI sommelier be a force for good? Sure. It's not hard to imagine a website advertising its AI-aggregated reviews as something like a Rotten Tomatoes for smashed grapes and fermented hops. As long as the proprietors were clear that the AI takes human-written reviews as input and outputs their most common themes, there'd be little risk of deception.

The researchers state that the antidote to the shady use of AI is transparency. But that just raises another question: who gets to decide how much transparency is necessary when it comes to explaining AI outputs?

If we don't know how many negative reviews or how much unintelligible gibberish an AI generated before it managed to output something useful, can we still trust what it produces?

Would you feel the same way about a positive AI review if you knew it was preceded by dozens of negative ones that the humans in charge didn’t show you?

Clearly, there are far more questions than answers when it comes to the ethical use of AI-generated content.
