Recent decisions by Facebook and YouTube to crack down on the far-right conspiracy theory movement known as QAnon will disrupt the ability of dangerous online communities to spread their radical messages, but it won’t stop them completely.

Facebook’s Oct. 6 announcement that it would take down any “accounts representing QAnon, even if they contain no violent content,” followed earlier decisions by the social media platform to down-rank QAnon content in Facebook searches. YouTube came next on Oct. 15 with new rules about conspiracy videos, but it stopped short of a complete ban.

This month marks the third anniversary of the movement that started when someone known only as Q posted a series of conspiracy theories on the internet forum 4chan. Q warned of a deep state satanic ring of global elites involved in pedophilia and sex trafficking and asserted that U.S. President Donald Trump was working on a secret plan to take them all down.

QAnon now a global phenomenon

Until this year, most people had never heard of QAnon. But over the course of 2020, the fringe movement has gained widespread traction both in the United States and internationally, and a number of Republican politicians have openly campaigned as Q supporters.

I have been researching QAnon for more than two years, and its recent evolution has shocked even me.

What most people don’t realize is that the QAnon of July and August was a different movement from the QAnon of October. I have never seen a movement evolve or radicalize as fast as QAnon has, and this is happening at a time when the global socio-political environment is very different from what it was in the summer.


All of these factors came into play when Facebook decided to take action against “militarized social movements and QAnon.”

In the weeks leading up to the ban, I had noticed a trend toward more violent content on Facebook, especially the circulation of memes and videos promoting “vehicle ramming attacks” with the slogan “all lives splatter,” along with other racist messages targeting Black people.

In explaining its ban, Facebook noted that while it had “removed QAnon content that celebrates and supports violence, we’ve seen other QAnon content tied to different forms of real-world harm, including recent claims that the (U.S.) West Coast wildfires were started by certain groups, which diverted the attention of local officials from fighting the fires and protecting the public.”

Prior action was ineffective

Before the outright ban, Facebook’s earlier attempts to keep QAnon groups from organizing on Facebook and Instagram were not enough to stop their false messages from spreading.

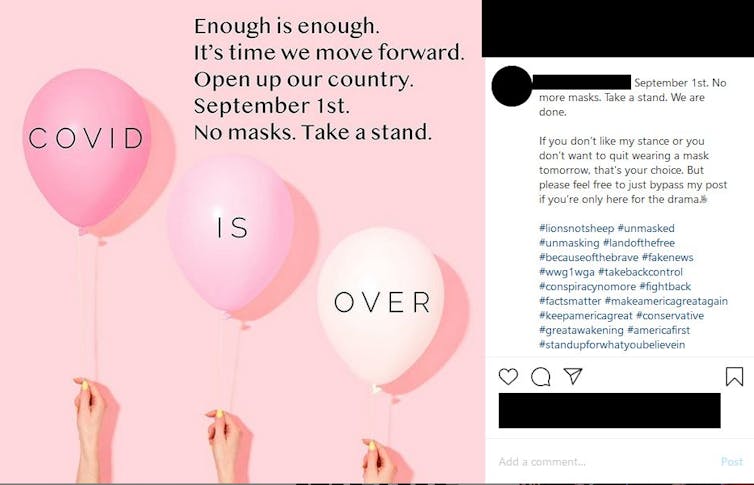

One way Q supporters adapted was through lighter forms of propaganda — something I call Pastel QAnon. As a way to circumvent the initial Facebook sanctions, women who believe in the QAnon conspiracies were using warm and colorful images to spread QAnon theories through health and wellness communities and by infiltrating legitimate charitable campaigns against child trafficking.

The latest move by Facebook will still allow Pastel QAnon to exist in adjacent lifestyle, health, and fitness communities — a softening of the traditionally raw QAnon narratives, but an effective way to spread the conspiracies to new audiences.

Some QAnon pages have survived the ban

Facebook will certainly be monitoring any attempts by the QAnon community to circumvent the ban. And while Facebook’s action reduced the number of QAnon accounts, it didn’t eliminate them completely — and realistically will not. My research shows the following:

- QAnon public groups: 186 before the ban; 18 after.

- QAnon public pages: 253 before the ban; 66 after.

- QAnon Instagram accounts: 269 before the ban; 111 after.

In the long run, Facebook’s actions will do lasting damage to QAnon’s presence on the platform. In the short and medium term, we will see pages and groups re-forming and trying to game the Facebook algorithm to avoid detection.

However, with little remaining presence on Facebook to quickly amplify new pages and groups, and with the changes to the search algorithm, these tactics will not be as effective as they were in the past.

Where will QAnon followers turn if Facebook is no longer the most effective way to spread the movement’s theories? QAnon has already fragmented further into communities on Telegram, Parler, MeWe, and Gab. These alternative social media platforms are less effective for promoting content or merchandise, which will hurt grifters who were profiting from QAnon and limit the reach of proselytizers.

But the ban will push those already convinced by QAnon onto platforms where they will interact with more extreme content they may not have found on Facebook. This will further radicalize some individuals and accelerate the process for others who were already on this path.

Like a religious movement

What we will likely see eventually is the balkanization of the QAnon ideology. It will be important to start treating QAnon not just as a conspiracy theory but as something closer to a new religious movement. It will also be important to consider how QAnon has been able to absorb, co-opt, or adapt itself to other ideologies.

Though Facebook has taken this important step, there will be much work ahead to make sure QAnon doesn’t reappear on the platform.

YouTube said its new rules for “managing harmful conspiracy theories” are intended to “curb hate and harassment by removing more conspiracy theory content used to justify real-world violence.”

In the initial wave of takedowns, YouTube shut down the channels of several QAnon influencers and proselytizers, in particular Canadian QAnon influencer Amazing Polly and Québec QAnon influencer Alexis Cossette-Trudel. Though this will cut off some of the major influencers, plenty of QAnon content on YouTube still falls outside the platform’s new rules.

The new rules will not end the role YouTube plays in drawing individuals into QAnon, nor will they stop those who go on to radicalize to violence, until the platform bans all QAnon content.

Video is the most widely used medium for circulating QAnon content across digital ecosystems. As long as QAnon still has a home on YouTube, we will continue to see its content on all social media platforms. Countering QAnon will ultimately require a multi-platform effort.

Technology and platforms provide a vector for extremist movements like QAnon. At its root, however, QAnon is a human issue, and the current socio-political environment around the world is fertile ground for its continued existence and growth.

The action by Facebook and YouTube is a step in the right direction, but this is not the end game. There is much work ahead for those working in this space.

This article is republished from The Conversation by Marc-André Argentino, PhD candidate Individualized Program, 2020-2021 Public Scholar, Concordia University under a Creative Commons license. Read the original article.
