An international team of researchers recently conducted a series of experiments to determine if humans are more likely to fire a weapon at a robot racialized as black than at one racialized as white.

Much like the doll experiments conducted by Kenneth and Mamie Clark in the 1940s, the researchers’ work was designed to determine if socially inherited racial bias exists in humans’ perception of an object which, by its very nature, cannot actually have a race.

Unfortunately, while many things have changed since then, some truly awful things haven’t.

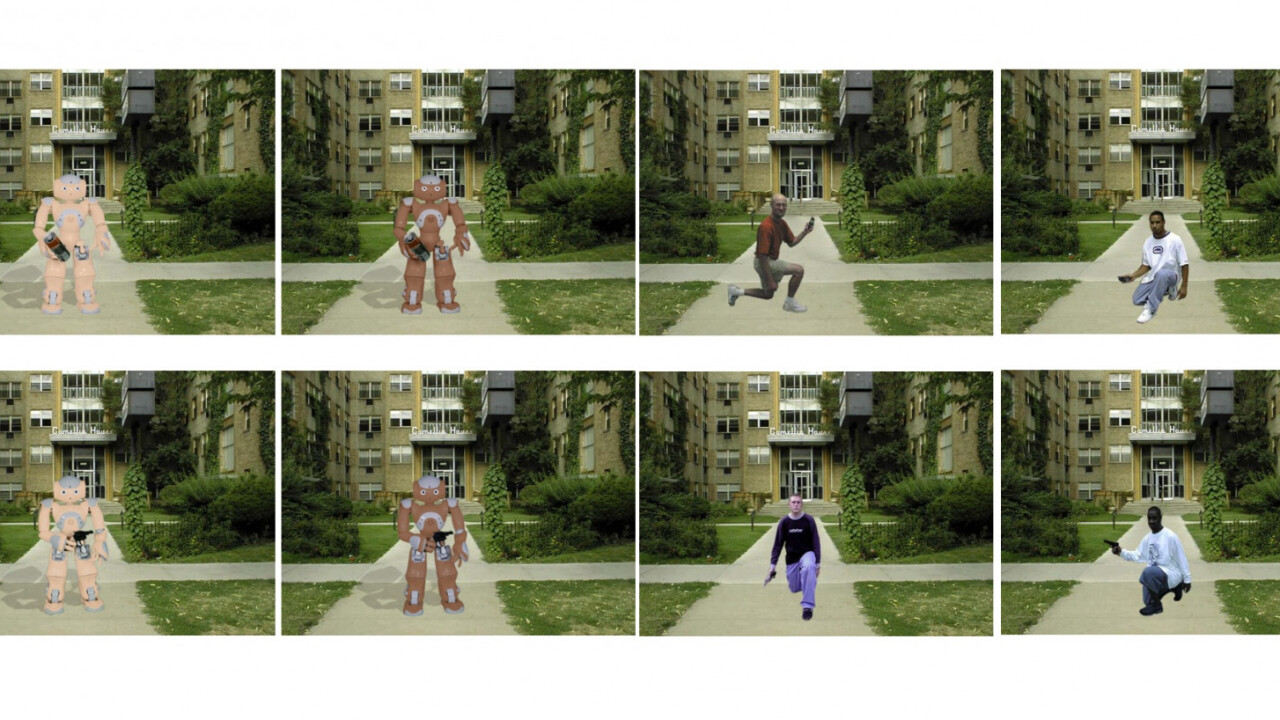

To test how people subconsciously view racialized robots, the researchers replicated a different set of experiments designed to determine if humans have a shooting bias towards black people. They did this by presenting people with images of a robot rendered in different colors representing human skin tones. During the experiment, the robot was displayed in each color both armed and unarmed.

According to the researchers’ white paper:

Reaction-time based measures revealed that participants demonstrated ‘shooter-bias’ toward both Black people and robots racialized as Black. Participants were also willing to attribute a race to the robots depending on their racialization and demonstrated a high degree of inter-subject agreement when it came to these attributions.

Human participants were asked to “shoot” the armed robots while avoiding firing at unarmed ones. Sounds easy enough, right? As in the original shooting bias experiments, the researchers in this study found that people were more likely to shoot the black robots than the white ones.

Participants were told they were playing the role of a police officer whose job is to shoot armed suspects. To make sure people weren’t attributing a race to the robots only because of that context, the team conducted separate research:

…. we conducted another study recruiting a separate sample from Crowdflower. Participants in this new study were only asked to define the race of the robot with several options including “Does not apply”. Data revealed that only 11.3% of participants and 7.5% of participants selected “Does not apply” for the black and white racialized robots, respectively.

The team’s research isn’t perfect: it was conducted using crowd-sourced survey methods, including Amazon’s Mechanical Turk, and reproducing the results in a laboratory environment is an important next step. But the results are in line with previous research, and they suggest that the problem of racial bias might be worse than even the most pessimistic experts believe.

Unfortunately, as the researchers put it:

There is a clear sense, then, in which these robots do not have – indeed cannot have – race in the same way had by people. Nevertheless, our studies demonstrated that participants were strongly inclined to attribute race to these robots, as revealed both by their explicit attributions and evidence of shooter bias. The level of agreement amongst participants when it came to their explicit attributions of race was especially striking.
