As we come to the end of 2019, we reflect on a year whose start already saw 100 machine learning papers published a day and its end looks to see a record-breaking funding year for AI.

But the path getting real value from data science and AI can be a long and difficult journey.

To paraphrase Eric Beinhocker from the Institute for New Economic Thinking, there are physical technologies that evolve at the pace of science, and social technologies that evolve at the pace at which humans can change — much slower.

Applied to the domain of data science and AI, the most sophisticated deep learning algorithms or the most robust and scalable real-time streaming data pipelines (‘physical technology’) mean little if decisions are not effectively made, organizational processes actively hinder data science and AI, and AI applications are not adopted due to lack of trust (‘social technology’).

With that in mind, my predictions for 2020 attempt to balance both aspects, with an emphasis on real value for companies, and not just ‘cool things’ for data science teams.

Data science and AI roles continue the trend towards specialization

There is a practical split between ‘engineering-heavy’ data science roles focused on large production systems and the infrastructure and platforms that underpin them (‘Data/ML/AI Engineers’), and ‘science-heavy’ data science role that focus on investigative work and decision support (‘Data Scientists/Business Analytics Professionals/Analytics Consultants’).

The contrasting skill sets, different mental models, and established department structures make this a compelling pattern. The former has a natural affinity with IT and gains prominence as more models move into production. It has also shown to be a viable career transition from software engineering (such as here, here and here). Conversely, the immediacy of decision support and the need to continuously navigate uncertainty require data scientists working in a consulting capacity to be embedded in the business rather than managed via projects.

We continue to quietly move away from the idea of the unicorn because just because someone can do something, does not mean he or she should. For all the value of the multi-talented performer, they are not a comparative advantage when it comes to building and scaling large data science teams.

Executive understanding of data science and AI becomes more important

The realization is dawning that the bottleneck to data science value may not be the technical aspects of data science or AI (gasp!), but the maturity of the actual consumers of data science.

While some technology companies and large corporations have a head start, there is a growing awareness that in-house training programs are often the best way to develop internal maturity. This is due to their ability to customize the content, start from where an organization is at and align training with identifiable company business problems and internal data sets.

End-to-end model management becomes the best practice where production is required

As the actual footprint of data science and AI projects in production gets larger, the problems that need to be solved have coalesced into the discipline of end-to-end model management. This includes deployment and monitoring of models (‘Model Ops’), different tiers of support, and oversight on when to retrain or rebuild models when they naturally entropy over time.

Models Ops and the systems that support the activity is also a distinct skill set that is different from that of data scientists and machine learning engineers, driving the evolution of both these teams and the IT organizations that support them.

Data science and AI ethics continue to gain momentum and are starting to form into a distinct discipline

Second-order effects of automated decision making at scale have always been an issue, but it is finally gaining mind share in the public consciousness. This is courtesy of the prominence of incidents like the Cambridge Analytica Scandal and Amazon scrapping its secret AI recruiting tool that showed bias against women.

The field itself is finding definition around clusters of topics, with activity around automated decision making and when to have a human-in-the-loop, algorithmic bias and fairness, privacy and consent, and longer-term dangers on the path to artificial general intelligence.

Of particular note is the interaction between data science and global privacy regulations. GDPR has been in effect as of mid-2018, and there are no limits on data processing and profiling, requirements of model transparency and the possibility of organizations that data scientists work for being held accountable for adverse consequences.

Technology usually outpaces regulatory paradigms by a few years, but regulation is catching up. This will cause short-term pain as data science and AI teams learn to work within new constraints, but will eventually lead to long term gain as credible players are separated from bad actors.

The convergence of tools causes confusion

This is due to multiple ways to do the same task, with different groups preferring different approaches depending on their background. This will likely continue to cause confusion as newer entrants to the industry may only see a part of the whole.

Today, you may model enterprise tools if you work for large organizations that can afford them. You may model in a database environment if you are a DBA with MS SQL Server. You could call machine learning APIs and develop an ‘AI product’ if you are a software engineer. You could build and deploy the same model on cloud platforms such as AWS Sagemaker or Azure ML Studio if you have familiarity with cloud offerings. And the list goes on.

The net result may be fertile ground for misunderstanding and turf wars due to similar functionality being available in different forms. Against this landscape, the organizations that are able to build high levels of trust across disparate technical teams will be the ones who reap the full benefits of the toolkit available today.

Efforts to ‘democratize’ and ‘automate’ data science and AI redouble, with parties that over-promise failing

With talent being somewhat elusive (or at least misallocated), automated data science and AI is an attractive idea. However, the reality remains that the boundaries of technology only enable certain well-specified tasks to be automated.

Taking a typical data science project, there is a lot that goes on around the activity of model building:

- Choosing the right project, putting together a team with the right mix of skills, communicating the approach, and securing necessary support and money if necessary.

- Once the project is set to start, selecting how to frame the problem and the approach to take. E.g. should failure prediction be framed as a supervised or unsupervised machine learning problem? Or a system to be subject to simulation? Or an anomaly detection problem?

- Once you have framed the problem, choosing the right data to use, and choosing the right data not to use — e.g. due to ethical considerations.

- Processing on the data side to make sure it will not result in an erroneous model. For example, email data actually requires a lot of wrangling to get at the actual message among the headers, tags etc.

- Once you have the data, generating hypotheses — e.g. in data mining in massive data sets, a lot of work is about deciding what ideas might be worth investigating before going to ‘do the data science’.

- Build and optimize the model. <This is what is being automated>

- Once you have built and optimized your models (if you chose to use models at all), deciding on whether it is valuable or not.

- Once you have decided that the work is worthwhile, embedding developed machine learning models into a production system and an established business process. This step alone often takes more time than all the other steps combined.

- Once the model is deployed, building out future releases to ensure that what is built is fully functioning, tested, and integrated with other systems.

- Once the entire machine learning system is well tested and performing up to engineering standards, actually interpreting and acting on the output from a data science project.

Just as Wix, Squarespace, and other website builders did not put web developers out of business, AutoML and DataRobot will not replace data scientists. (They are, however, great tools, and should be marketed as such.)

Architecture at the Edge and Fog starts to enter the mainstream

The practical necessity and engineering cost of deploying increasingly large sophisticated models is driving new architecture patterns. This is especially true for both compute and data transfer requirements of real-time video analytics, being lauded as the ‘killer app’ for edge analytics. The trend is being supported by both advances in computer vision and new purpose-built commercial hardware such as the AWS Deeplens.

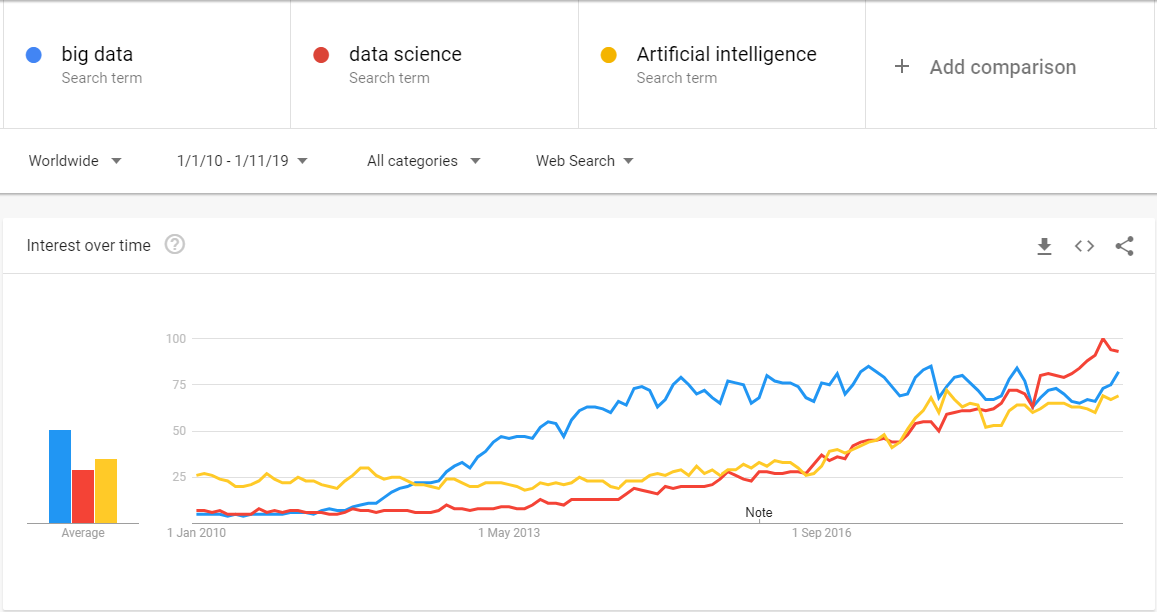

The hype cycle and deluge of definitions are shifting

It was first focused on “big data,” before moving to “data science” some 5–6 years ago, and 2020 may be the year that all things “AI” could overtake the conversation.

One of the side effects of having to onboard large numbers of newcomers is a simplification of the field, which in the case of data science was reducing it to emphasize more on statistics and machine learning while de-emphasizing other mathematical modeling disciplines such as operations research and simulation.

A similar pattern has started to occur in AI, with the analogous emphasis on machine learning, neural networks, and deep learning, often in the context of vision and natural language processing. The de-emphasis currently seems to occur in classical AI fields such as knowledge representation, expert systems, and planning, among others.

As a side note, I completely empathize that it is difficult to move to a new field, and the breadth of data science and AI can be overwhelming. What I have found most useful in breaking this wall is seldom more content, it is better navigation. Having someone who can orientate what we know and do not know, and draw a personal roadmap of learning is far more useful than an unordered list of links to learning materials.

Competition enters the AI chip market

Nvidia has had a tremendous head start in the market for hardware for deep learning, and currently dominates most of AI in the cloud. While there are significant entrants from Google, Qualcomm, Amazon, Xilinx, and multiple startups, competition is still mostly occurring on the margins.

This will eventually change as powering AI is not about ‘just a chip’, but about complete, portable hardware platforms, preferably without vendor lock-in. Intel and Facebook’s new chip may be the awaited competition, or it could come from Chinese companies rushing to make their own chips as trade war bites. Almost on cue, the second half to 2019 saw Alibaba and Huawei both unveil chips.

It is still easier to teach data science and AI and sell tools than to actually make it work in practice

Creating value from data science and AI is not only hard but require discussion and consensus beyond the data scientist and machine learning engineer alike.

AI systems are often, at their core, optimization machines. And the question we have only started to ask is “what are we optimizing for?” For all the attention given to “doing things right” in data, modeling, and architecture, the arguably harder task is “doing right things” in terms of designing for human-centric experiences and values.

Likewise, data-driven decisions need to be taken by senior, non-technical decision-makers, often tangled in complex webs of political intrigue, and have often succeeded their entire careers without data science.

On the production front, successful model deployment is but one small part of a product and can be constrained by a myriad of factors ranging from internal IT environments to archaic regulatory requirements, and all this on top of the inherent uncertainty of working with data. The obsession with ‘models in production’ themselves may also be somewhat misguided, and one of the primary KPIs of data science remains its most elusive — “did you change a mind?”

This article was written by Jason T Widjaja and originally published at Towardsdatascience.

Get the TNW newsletter

Get the most important tech news in your inbox each week.