Over the last several months, YouTube has reeled from a wave of criticism. It has been accused of radicalizing young voters, ignoring harassment of LGBT content creators, and most importantly not doing enough to address toxic videos on the platform. Now it’s got a new problem: the YouTube Kids app.

According to a new report published by Bloomberg’s Mark Bergen and Lucas Shaw, the Google-owned company is painfully aware its kid-friendly alternative isn’t appealing to children, and that it’s struggling to make it safe for the few kids who end up using it.

Those who do watch YouTube Kids don’t stick around for long either, the report says, instead shifting over to YouTube’s main site well before they turn 13 years old.

“One person who works on the app said the departures typically happen around age seven. In India, YouTube’s biggest market by volume, usage of the Kids app is negligible, according to this employee,” the report states.

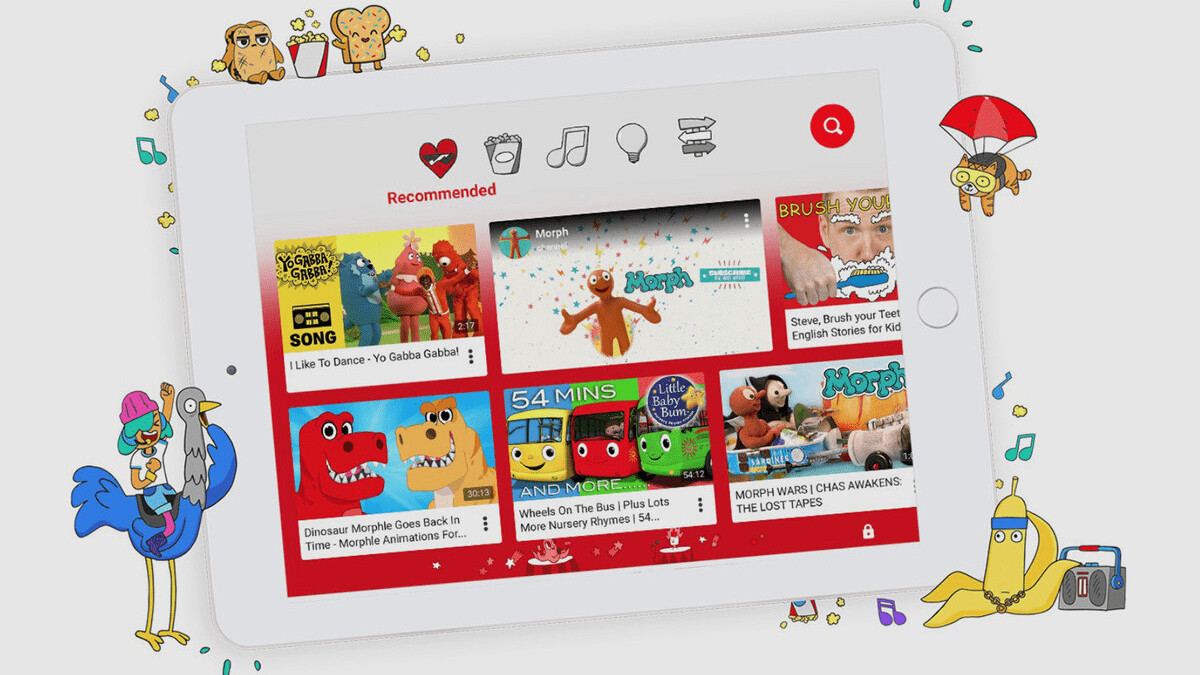

YouTube Kids has been marketed as an app “made for curious little minds,” but it has previously been found to surface inappropriate content, including explicit sexual language and jokes about pedophilia.

The app’s video recommendation system has also made it easy to automatically steer users to disturbing material, intentionally or otherwise.

Currently, the app allows parents to report an inappropriate video, or customize kids’ viewing experience by approving specific channels or blocking those they don’t want their children to watch.

After revelations that comment sections were full of viewers timestamping specific scenes that sexualize the child or children in the video, the company turned off comments on videos that feature minors, and disabled live-streaming options for kids unless a parent or guardian is present. But all these measures can only go so far.

Earlier this year, Bloomberg disclosed how employees’ proposals to tweak YouTube’s recommendation algorithms to weed out borderline content and curb conspiracies were sacrificed for the sake of driving user engagement, leading ex-employees to brand it “toxic.”

While some of YouTube’s problems are symptomatic of a larger trend that pervades recommendation-based social networks like Facebook, Twitter, and Instagram, suggesting inappropriate content is a deal-breaker for parents who expect the app to be filled with nothing but millions of hours of cartoons, nursery videos, and other kid-friendly videos.

There’s no doubt YouTube needs to clean up its toxic recommendation system. But if hand-picking every video that appears in the Kids app isn’t working in its favor (kids between seven and 12 reportedly grew bored of the limited library and went to surf regular YouTube), it needs to do more to filter out inappropriate content, or deliver relevant warnings when kids go down the rabbit hole of “Up next” YouTube recommendations.

Ultimately, this also puts the onus on parents to monitor their children’s online activities more closely. If that means limiting use of YouTube to specific channels, or restricting the amount of time kids spend online, so be it.

You can read the entire story by Mark Bergen and Lucas Shaw on Bloomberg here.
